Grafana Loki: A Rising Enterprise Logging Platform That Is Far Lighter Than ELK

Source blog: https://blog.csdn.net/Linkthaha

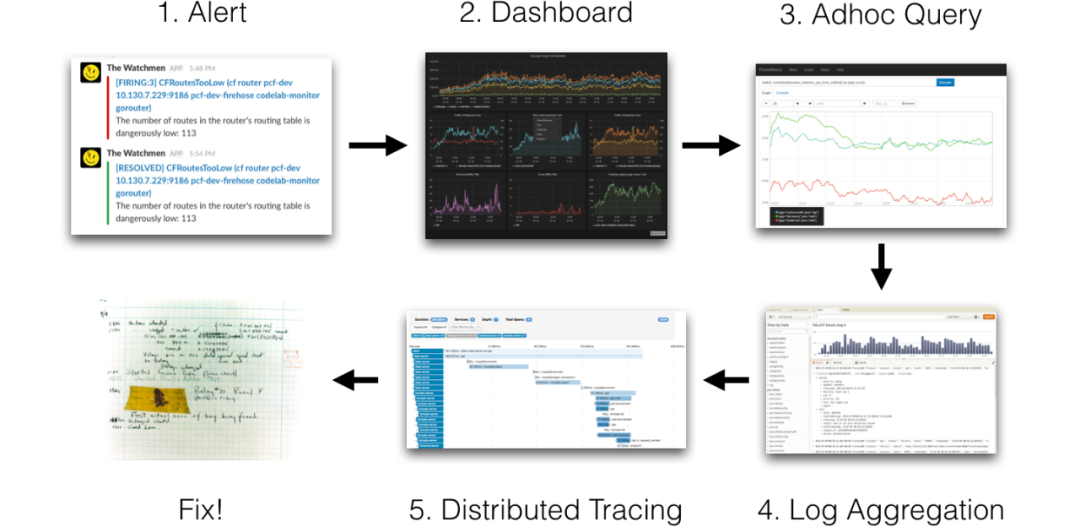

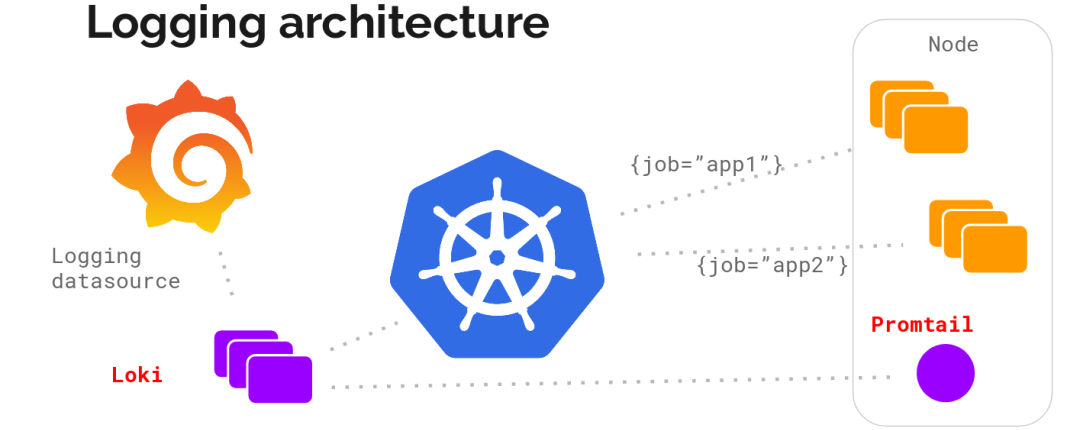

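Our monitoring is built on a customized Prometheus stack. The two central concepts in Prometheus are Metrics and Alerts: a Metric records that a value was reached, now or in the past, and an Alert fires when a Metric crosses a configured threshold. That information alone is clearly not enough. The basic unit in Kubernetes is the Pod, and a Pod writes its logs to stdout and stderr. When something goes wrong, we usually read those logs in the web console or from the command line. For example, when a Pod's memory usage grows large enough to trigger an Alert, the administrator first opens the console to find out which Pod is affected, and then has to read that Pod's logs to learn why its memory grew. Without a logging system, that means looking the logs up by hand in the console or with commands.

The commands and manifests that follow deploy Loki and Promtail into a loki project on OpenShift and then query the Loki HTTP API.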

oc new-project loki

oc adm policy add-scc-to-user anyuid -z default -n loki

oc adm policy add-cluster-role-to-user cluster-admin system:serviceaccount:loki:default

oc create -f statefulset.json -n loki

{

"apiVersion": "apps/v1",

"kind": "StatefulSet",

"metadata": {

"name": "loki"

},

"spec": {

"podManagementPolicy": "OrderedReady",

"replicas": 1,

"revisionHistoryLimit": 10,

"selector": {

"matchLabels": {

"app": "loki"

}

},

"serviceName": "womping-stoat-loki-headless",

"template": {

"metadata": {

"annotations": {

"checksum/config": "da297d66ee53e0ce68b58e12be7ec5df4a91538c0b476cfe0ed79666343df72b",

"prometheus.io/port": "http-metrics",

"prometheus.io/scrape": "true"

},

"creationTimestamp": null,

"labels": {

"app": "loki",

"name": "loki"

}

},

"spec": {

"affinity": {},

"containers": [

{

"args": [

"-config.file=/etc/loki/local-config.yaml"

],

"image": "grafana/loki:latest",

"imagePullPolicy": "IfNotPresent",

"livenessProbe": {

"failureThreshold": 3,

"httpGet": {

"path": "/ready",

"port": "http-metrics",

"scheme": "HTTP"

},

"initialDelaySeconds": 45,

"periodSeconds": 10,

"successThreshold": 1,

"timeoutSeconds": 1

},

"name": "loki",

"ports": [

{

"containerPort": 3100,

"name": "http-metrics",

"protocol": "TCP"

}

],

"readinessProbe": {

"failureThreshold": 3,

"httpGet": {

"path": "/ready",

"port": "http-metrics",

"scheme": "HTTP"

},

"initialDelaySeconds": 45,

"periodSeconds": 10,

"successThreshold": 1,

"timeoutSeconds": 1

},

"resources": {},

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"volumeMounts": [

{

"mountPath": "/tmp/loki",

"name": "storage"

}

]

}

],

"dnsPolicy": "ClusterFirst",

"restartPolicy": "Always",

"schedulerName": "default-scheduler",

"terminationGracePeriodSeconds": 30,

"volumes": [

{

"emptyDir": {},

"name": "storage"

}

]

}

},

"updateStrategy": {

"type": "RollingUpdate"

}

}

}

oc create -f configmap.json -n loki

{

"apiVersion": "v1",

"data": {

"promtail.yaml": "client:\n  backoff_config:\n    maxbackoff: 5s\n    maxretries: 5\n    minbackoff: 100ms\n  batchsize: 102400\n  batchwait: 1s\n  external_labels: {}\n  timeout: 10s\npositions:\n  filename: /run/promtail/positions.yaml\nserver:\n  http_listen_port: 3101\ntarget_config:\n  sync_period: 10s\n\nscrape_configs:\n- job_name: kubernetes-pods-name\n  pipeline_stages:\n  - docker: {}\n  kubernetes_sd_configs:\n  - role: pod\n  relabel_configs:\n  - source_labels:\n    - __meta_kubernetes_pod_label_name\n    target_label: __service__\n  - source_labels:\n    - __meta_kubernetes_pod_node_name\n    target_label: __host__\n  - action: drop\n    regex: ^$\n    source_labels:\n    - __service__\n  - action: labelmap\n    regex: __meta_kubernetes_pod_label_(.+)\n  - action: replace\n    replacement: $1\n    separator: /\n    source_labels:\n    - __meta_kubernetes_namespace\n    - __service__\n    target_label: job\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_namespace\n    target_label: namespace\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_name\n    target_label: instance\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_container_name\n    target_label: container_name\n  - replacement: /var/log/pods/*$1/*.log\n    separator: /\n    source_labels:\n    - __meta_kubernetes_pod_uid\n    - __meta_kubernetes_pod_container_name\n    target_label: __path__\n- job_name: kubernetes-pods-app\n  pipeline_stages:\n  - docker: {}\n  kubernetes_sd_configs:\n  - role: pod\n  relabel_configs:\n  - action: drop\n    regex: .+\n    source_labels:\n    - __meta_kubernetes_pod_label_name\n  - source_labels:\n    - __meta_kubernetes_pod_label_app\n    target_label: __service__\n  - source_labels:\n    - __meta_kubernetes_pod_node_name\n    target_label: __host__\n  - action: drop\n    regex: ^$\n    source_labels:\n    - __service__\n  - action: labelmap\n    regex: __meta_kubernetes_pod_label_(.+)\n  - action: replace\n    replacement: $1\n    separator: /\n    source_labels:\n    - __meta_kubernetes_namespace\n    - __service__\n    target_label: job\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_namespace\n    target_label: namespace\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_name\n    target_label: instance\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_container_name\n    target_label: container_name\n  - replacement: /var/log/pods/*$1/*.log\n    separator: /\n    source_labels:\n    - __meta_kubernetes_pod_uid\n    - __meta_kubernetes_pod_container_name\n    target_label: __path__\n- job_name: kubernetes-pods-direct-controllers\n  pipeline_stages:\n  - docker: {}\n  kubernetes_sd_configs:\n  - role: pod\n  relabel_configs:\n  - action: drop\n    regex: .+\n    separator: ''\n    source_labels:\n    - __meta_kubernetes_pod_label_name\n    - __meta_kubernetes_pod_label_app\n  - action: drop\n    regex: ^([0-9a-z-.]+)(-[0-9a-f]{8,10})$\n    source_labels:\n    - __meta_kubernetes_pod_controller_name\n  - source_labels:\n    - __meta_kubernetes_pod_controller_name\n    target_label: __service__\n  - source_labels:\n    - __meta_kubernetes_pod_node_name\n    target_label: __host__\n  - action: drop\n    regex: ^$\n    source_labels:\n    - __service__\n  - action: labelmap\n    regex: __meta_kubernetes_pod_label_(.+)\n  - action: replace\n    replacement: $1\n    separator: /\n    source_labels:\n    - __meta_kubernetes_namespace\n    - __service__\n    target_label: job\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_namespace\n    target_label: namespace\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_name\n    target_label: instance\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_container_name\n    target_label: container_name\n  - replacement: /var/log/pods/*$1/*.log\n    separator: /\n    source_labels:\n    - __meta_kubernetes_pod_uid\n    - __meta_kubernetes_pod_container_name\n    target_label: __path__\n- job_name: kubernetes-pods-indirect-controller\n  pipeline_stages:\n  - docker: {}\n  kubernetes_sd_configs:\n  - role: pod\n  relabel_configs:\n  - action: drop\n    regex: .+\n    separator: ''\n    source_labels:\n    - __meta_kubernetes_pod_label_name\n    - __meta_kubernetes_pod_label_app\n  - action: keep\n    regex: ^([0-9a-z-.]+)(-[0-9a-f]{8,10})$\n    source_labels:\n    - __meta_kubernetes_pod_controller_name\n  - action: replace\n    regex: ^([0-9a-z-.]+)(-[0-9a-f]{8,10})$\n    source_labels:\n    - __meta_kubernetes_pod_controller_name\n    target_label: __service__\n  - source_labels:\n    - __meta_kubernetes_pod_node_name\n    target_label: __host__\n  - action: drop\n    regex: ^$\n    source_labels:\n    - __service__\n  - action: labelmap\n    regex: __meta_kubernetes_pod_label_(.+)\n  - action: replace\n    replacement: $1\n    separator: /\n    source_labels:\n    - __meta_kubernetes_namespace\n    - __service__\n    target_label: job\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_namespace\n    target_label: namespace\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_name\n    target_label: instance\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_container_name\n    target_label: container_name\n  - replacement: /var/log/pods/*$1/*.log\n    separator: /\n    source_labels:\n    - __meta_kubernetes_pod_uid\n    - __meta_kubernetes_pod_container_name\n    target_label: __path__\n- job_name: kubernetes-pods-static\n  pipeline_stages:\n  - docker: {}\n  kubernetes_sd_configs:\n  - role: pod\n  relabel_configs:\n  - action: drop\n    regex: ^$\n    source_labels:\n    - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_label_component\n    target_label: __service__\n  - source_labels:\n    - __meta_kubernetes_pod_node_name\n    target_label: __host__\n  - action: drop\n    regex: ^$\n    source_labels:\n    - __service__\n  - action: labelmap\n    regex: __meta_kubernetes_pod_label_(.+)\n  - action: replace\n    replacement: $1\n    separator: /\n    source_labels:\n    - __meta_kubernetes_namespace\n    - __service__\n    target_label: job\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_namespace\n    target_label: namespace\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_name\n    target_label: instance\n  - action: replace\n    source_labels:\n    - __meta_kubernetes_pod_container_name\n    target_label: container_name\n  - replacement: /var/log/pods/*$1/*.log\n    separator: /\n    source_labels:\n    - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror\n    - __meta_kubernetes_pod_container_name\n    target_label: __path__\n"

},

"kind": "ConfigMap",

"metadata": {

"creationTimestamp": "2019-09-05T01:05:03Z",

"labels": {

"app": "promtail",

"chart": "promtail-0.12.0",

"heritage": "Tiller",

"release": "lame-zorse"

},

"name": "lame-zorse-promtail",

"namespace": "loki"

}

}
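The last relabel rule in each scrape job builds the __path__ label that tells Promtail which files to tail. The sketch below illustrates how a Prometheus-style replace rule is generally understood to work: the source label values are joined with the separator, matched against an anchored regex (assumed to default to (.*)), and the $1 reference in the replacement is filled in. The function name relabel_replace and the sample pod UID are made up for illustration, and this is a simplification rather than Promtail's actual implementation.

```python
import re


def relabel_replace(labels, source_labels, target_label,
                    replacement="$1", separator=";", regex="(.*)"):
    """Apply one simplified 'replace' relabel rule and return a new label dict."""
    # Join the source label values; a missing label counts as an empty string.
    joined = separator.join(labels.get(name, "") for name in source_labels)

    # The regex must match the whole joined value, otherwise nothing changes.
    match = re.fullmatch(regex, joined, flags=re.DOTALL)
    if match is None:
        return dict(labels)

    # Substitute $1, $2, ... in the replacement with the captured groups.
    def group_value(ref):
        return match.group(int(ref.group(1))) or ""

    result = dict(labels)
    result[target_label] = re.sub(r"\$(\d+)", group_value, replacement)
    return result


# Hypothetical discovered labels for one container.
discovered = {
    "__meta_kubernetes_pod_uid": "30fcb896",
    "__meta_kubernetes_pod_container_name": "mysql",
}
with_path = relabel_replace(
    discovered,
    ["__meta_kubernetes_pod_uid", "__meta_kubernetes_pod_container_name"],
    "__path__",
    replacement="/var/log/pods/*$1/*.log",
    separator="/",
)
print(with_path["__path__"])
```

The resulting glob is what has to line up with the /var/log/pods hostPath mounted into the Promtail container.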

oc create -f daemonset.json -n loki

{

"apiVersion": "apps/v1",

"kind": "DaemonSet",

"metadata": {

"annotations": {

"deployment.kubernetes.io/revision": "2"

},

"creationTimestamp": "2019-09-05T01:16:37Z",

"generation": 2,

"labels": {

"app": "promtail",

"chart": "promtail-0.12.0",

"heritage": "Tiller",

"release": "lame-zorse"

},

"name": "lame-zorse-promtail",

"namespace": "loki"

},

"spec": {

"revisionHistoryLimit": 10,

"selector": {

"matchLabels": {

"app": "promtail",

"release": "lame-zorse"

}

},

"updateStrategy": {

"rollingUpdate": {

"maxUnavailable": 1

},

"type": "RollingUpdate"

},

"template": {

"metadata": {

"annotations": {

"checksum/config": "75a25ee4f2869f54d394bf879549a9c89c343981a648f8d878f69bad65dba809",

"prometheus.io/port": "http-metrics",

"prometheus.io/scrape": "true"

},

"creationTimestamp": null,

"labels": {

"app": "promtail",

"release": "lame-zorse"

}

},

"spec": {

"affinity": {},

"containers": [

{

"args": [

"-config.file=/etc/promtail/promtail.yaml",

"-client.url=http://loki.loki.svc:3100/api/prom/push"

],

"env": [

{

"name": "HOSTNAME",

"valueFrom": {

"fieldRef": {

"apiVersion": "v1",

"fieldPath": "spec.nodeName"

}

}

}

],

"image": "grafana/promtail:v0.3.0",

"imagePullPolicy": "IfNotPresent",

"name": "promtail",

"ports": [

{

"containerPort": 3101,

"name": "http-metrics",

"protocol": "TCP"

}

],

"readinessProbe": {

"failureThreshold": 5,

"httpGet": {

"path": "/ready",

"port": "http-metrics",

"scheme": "HTTP"

},

"initialDelaySeconds": 10,

"periodSeconds": 10,

"successThreshold": 1,

"timeoutSeconds": 1

},

"resources": {},

"securityContext": {

"readOnlyRootFilesystem": true,

"runAsUser": 0

},

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"volumeMounts": [

{

"mountPath": "/etc/promtail",

"name": "config"

},

{

"mountPath": "/run/promtail",

"name": "run"

},

{

"mountPath": "/var/lib/docker/containers",

"name": "docker",

"readOnly": true

},

{

"mountPath": "/var/log/pods",

"name": "pods",

"readOnly": true

}

]

}

],

"dnsPolicy": "ClusterFirst",

"restartPolicy": "Always",

"schedulerName": "default-scheduler",

"securityContext": {},

"terminationGracePeriodSeconds": 30,

"volumes": [

{

"configMap": {

"defaultMode": 420,

"name": "lame-zorse-promtail"

},

"name": "config"

},

{

"hostPath": {

"path": "/run/promtail",

"type": ""

},

"name": "run"

},

{

"hostPath": {

"path": "/var/lib/docker/containers",

"type": ""

},

"name": "docker"

},

{

"hostPath": {

"path": "/var/log/pods",

"type": ""

},

"name": "pods"

}

]

}

}

}

}

oc create -f service.json -n loki

{

"apiVersion": "v1",

"kind": "Service",

"metadata": {

"name": "loki",

"namespace": "loki"

},

"spec": {

"externalTrafficPolicy": "Cluster",

"ports": [

{

"name": "lokiport",

"port": 3100,

"protocol": "TCP",

"targetPort": 3100

}

],

"selector": {

"app": "loki"

},

"sessionAffinity": "None",

"type": "NodePort"

},

"status": {

"loadBalancer": {}

}

}

curl http://192.168.25.30:30972/api/prom/label

{

"values": ["alertmanager", "app", "component", "container_name", "controller_revision_hash", "deployment", "deploymentconfig", "docker_registry", "draft", "filename", "instance", "job", "logging_infra", "metrics_infra", "name", "namespace", "openshift_io_component", "pod_template_generation", "pod_template_hash", "project", "projectname", "prometheus", "provider", "release", "router", "servicename", "statefulset_kubernetes_io_pod_name", "stream", "tekton_dev_pipeline", "tekton_dev_pipelineRun", "tekton_dev_pipelineTask", "tekton_dev_task", "tekton_dev_taskRun", "type", "webconsole"]

}

curl http://192.168.25.30:30972/api/prom/label/namespace/values

{"values":["cicd","default","gitlab","grafanaserver","jenkins","jx-staging","kube-system","loki","mysql-exporter","new2","openshift-console","openshift-infra","openshift-logging","openshift-monitoring","openshift-node","openshift-sdn","openshift-web-console","tekton-pipelines","test111"]}
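The two label endpoints above can also be reached from a script. The following is a minimal sketch that only builds the URLs and parses a response body of the shape shown above; the helper names are hypothetical, and the base address is simply the NodePort address used in the curl examples.

```python
import json
from urllib.parse import quote

# NodePort address taken from the curl examples; adjust for your cluster.
LOKI_BASE = "http://192.168.25.30:30972"


def label_names_url(base=LOKI_BASE):
    """URL that lists every label name known to Loki."""
    return f"{base}/api/prom/label"


def label_values_url(name, base=LOKI_BASE):
    """URL that lists the values seen for one label name."""
    return f"{base}/api/prom/label/{quote(name, safe='')}/values"


def parse_values(body):
    """Extract the sorted 'values' list from a label API response body."""
    return sorted(json.loads(body).get("values", []))


sample_body = '{"values":["cicd","default","loki"]}'
print(label_values_url("namespace"))
print(parse_values(sample_body))
```

To fetch live data, the response body could be read with urllib.request.urlopen(label_names_url()).read() and passed to parse_values.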

http://192.168.25.30:30972/api/prom/query?direction=BACKWARD&limit=1000&regexp=&query={namespace="cicd"}&start=1567644457221000000&end=1567730857221000000&refId=A

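query: the query expression; the syntax is described in the sections below. Examples are {name=~"mysql.+"} and {namespace="cicd"} |= "error", where the latter returns log lines from the cicd namespace that contain the string error.

limit: the maximum number of log lines to return.

start: the start time as a Unix timestamp in nanoseconds (as in the example URL); defaults to one hour ago.

end: the end time, in the same format; defaults to the current time.

direction: forward or backward; useful when limit is specified. Defaults to backward.

regexp: a regular expression used to filter the returned lines.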
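As an illustration of the parameters above, here is a minimal sketch that assembles a query URL for the /api/prom/query endpoint. The helper names to_unix_nanos and build_query_url are hypothetical; the base address and the cicd selector are taken from the example URL, and the timestamps are converted to nanoseconds with integer arithmetic to avoid floating-point rounding.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_unix_nanos(moment):
    """Convert a timezone-aware datetime to Unix time in nanoseconds."""
    delta = moment - EPOCH
    whole_seconds = delta.days * 86400 + delta.seconds
    return whole_seconds * 10**9 + delta.microseconds * 1000


def build_query_url(base, query, start, end, limit=1000, direction="BACKWARD"):
    """Build a query URL; urlencode escapes the braces and quotes in the selector."""
    params = {
        "query": query,
        "limit": limit,
        "start": to_unix_nanos(start),
        "end": to_unix_nanos(end),
        "direction": direction,
    }
    return f"{base}/api/prom/query?{urlencode(params)}"


# A 24-hour window ending at the same instant as the example URL.
end = datetime(2019, 9, 6, 0, 47, 37, 221000, tzinfo=timezone.utc)
start = end - timedelta(hours=24)
url = build_query_url("http://192.168.25.30:30972", '{namespace="cicd"}', start, end)
print(url)
```

Percent-encoding the selector is the main reason to build the URL programmatically, since the raw braces and double quotes are easy to mangle when pasted into a shell.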

{app="mysql",name="mysql-backup"}

=: exactly equal.

!=: not equal.

=~: matches the regular expression.

!~: does not match the regular expression.
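The four label matching operators can be pictured with a small emulation. This is only a sketch of the semantics: it assumes that regex matchers are anchored to the whole label value (as in Prometheus), it uses Python's re module rather than the Go regex engine Loki uses, and the function names are hypothetical.

```python
import re


def label_matches(labels, name, op, value):
    """Evaluate one label matcher against a stream's label set."""
    actual = labels.get(name, "")  # a missing label is treated as empty
    if op == "=":
        return actual == value
    if op == "!=":
        return actual != value
    if op == "=~":
        return re.fullmatch(value, actual) is not None
    if op == "!~":
        return re.fullmatch(value, actual) is None
    raise ValueError(f"unknown operator: {op}")


def stream_selected(labels, matchers):
    """A stream is selected only when every matcher in the selector holds."""
    return all(label_matches(labels, name, op, value) for name, op, value in matchers)


stream = {"app": "mysql", "name": "mysql-backup"}
selector = [("app", "=", "mysql"), ("name", "=", "mysql-backup")]
print(stream_selected(stream, selector))
```

Under the anchoring assumption, name=~"mysql.+" selects mysql-backup, while name=~"mysql" does not.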

{job="mysql"} |= "error"

{name="kafka"} |~ "tsdb-ops.*io:2003"

{instance=~"kafka-[23]",name="kafka"} != "kafka.server:type=ReplicaManager"

{job="mysql"} |= "error" != "timeout"

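|= the line contains the string.

!= the line does not contain the string.

|~ the line matches the regular expression.

!~ the line does not match the regular expression.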
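Filter expressions can be chained, and a line is kept only when it passes every filter in order. The sketch below emulates that behaviour on plain strings. It assumes that filter regexes are not anchored (a match anywhere in the line counts), it uses Python's re module in place of Loki's regex engine, and the function name line_passes is hypothetical.

```python
import re


def line_passes(line, filters):
    """Return True when the log line satisfies every (operator, pattern) filter."""
    for op, pattern in filters:
        if op == "|=":
            ok = pattern in line
        elif op == "!=":
            ok = pattern not in line
        elif op == "|~":
            ok = re.search(pattern, line) is not None
        elif op == "!~":
            ok = re.search(pattern, line) is None
        else:
            raise ValueError(f"unknown filter operator: {op}")
        if not ok:
            return False
    return True


# Equivalent of: |= "error" != "timeout"
filters = [("|=", "error"), ("!=", "timeout")]
lines = [
    "error: disk full",
    "error: timeout waiting for lock",
    "backup finished",
]
kept = [line for line in lines if line_passes(line, filters)]
print(kept)
```

Only the first sample line survives, because the second contains timeout and the third does not contain error.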
