An Open Source Tool for Automating K3s Cluster Upgrades

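Even when your cluster runs smoothly, upgrading Kubernetes remains a difficult task. Because Kubernetes ships a new release about every three months, upgrading is necessary: skip upgrades for a year and your cluster falls well behind. Rancher aims to solve the pain points of developers and operators, so it created a new open source project, System Upgrade Controller, to help them upgrade smoothly.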

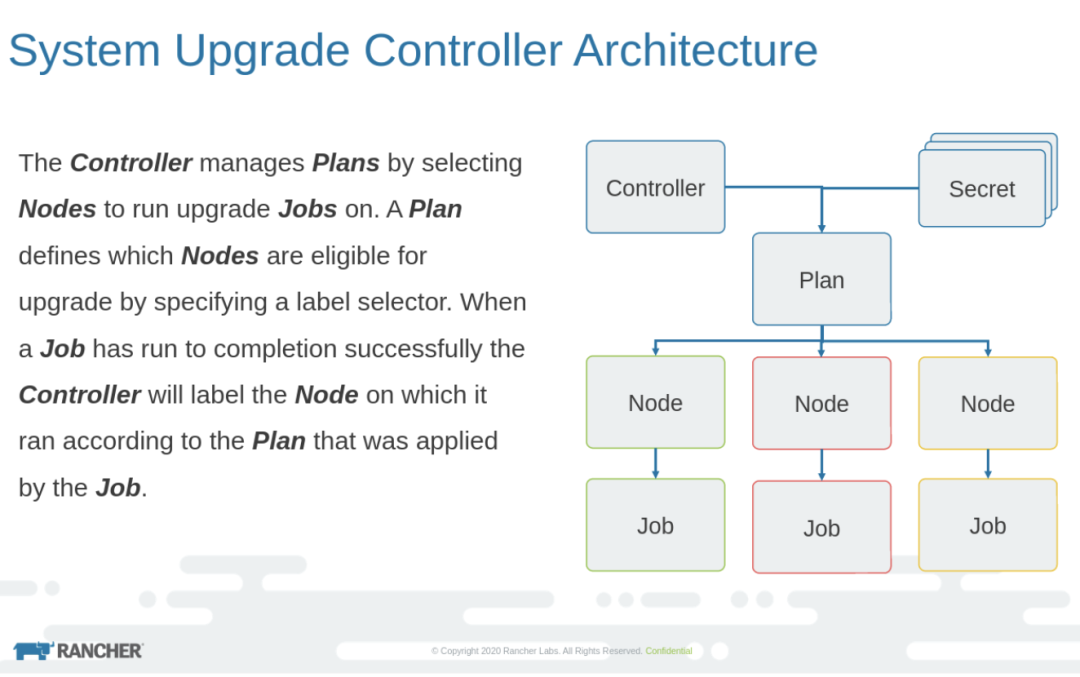

System Upgrade Controller引入了一个新的Kubernetes自定义资源定义(CRD),称为Plan。现在Plan是处理升级进程的主要组件。以下是从git repo获取的架构图:

Using System Upgrade Controller to Automatically Upgrade K3s

Upgrading a K3s Kubernetes cluster has two main requirements:

Installing the CRD

Creating a Plan

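First, let's check the version of the K3s cluster that is currently running.

If you do not have a cluster yet, the following command installs one quickly: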

    curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.16.3-k3s.2 sh -

    # K3S_TOKEN is created at /var/lib/rancher/k3s/server/node-token on the server.
    # To add nodes, K3S_URL and K3S_TOKEN need to be passed:
    curl -sfL https://get.k3s.io | K3S_URL=https://myserver:6443 K3S_TOKEN=XXX sh -

    # The KUBECONFIG file is created at /etc/rancher/k3s/k3s.yaml

With the cluster running, list the nodes to see their versions:

    kubectl get nodes
    NAME               STATUS   ROLES    AGE   VERSION
    kube-node-c155     Ready    <none>   25h   v1.16.3-k3s.2
    kube-node-2404     Ready    <none>   25h   v1.16.3-k3s.2
    kube-master-303d   Ready    master   25h   v1.16.3-k3s.2

Now let's deploy the CRD by installing the controller manifest:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: system-upgrade
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: system-upgrade
      namespace: system-upgrade
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system-upgrade
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
      - kind: ServiceAccount
        name: system-upgrade
        namespace: system-upgrade
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: default-controller-env
      namespace: system-upgrade
    data:
      SYSTEM_UPGRADE_CONTROLLER_DEBUG: "false"
      SYSTEM_UPGRADE_CONTROLLER_THREADS: "2"
      SYSTEM_UPGRADE_JOB_ACTIVE_DEADLINE_SECONDS: "900"
      SYSTEM_UPGRADE_JOB_BACKOFF_LIMIT: "99"
      SYSTEM_UPGRADE_JOB_IMAGE_PULL_POLICY: "Always"
      SYSTEM_UPGRADE_JOB_KUBECTL_IMAGE: "rancher/kubectl:v1.18.3"
      SYSTEM_UPGRADE_JOB_PRIVILEGED: "true"
      SYSTEM_UPGRADE_JOB_TTL_SECONDS_AFTER_FINISH: "900"
      SYSTEM_UPGRADE_PLAN_POLLING_INTERVAL: "15m"
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: system-upgrade-controller
      namespace: system-upgrade
    spec:
      selector:
        matchLabels:
          upgrade.cattle.io/controller: system-upgrade-controller
      template:
        metadata:
          labels:
            upgrade.cattle.io/controller: system-upgrade-controller # necessary to avoid drain
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                  - matchExpressions:
                      - {key: "node-role.kubernetes.io/master", operator: In, values: ["true"]}
          serviceAccountName: system-upgrade
          tolerations:
            - key: "CriticalAddonsOnly"
              operator: "Exists"
            - key: "node-role.kubernetes.io/master"
              operator: "Exists"
              effect: "NoSchedule"
          containers:
            - name: system-upgrade-controller
              image: rancher/system-upgrade-controller:v0.5.0
              imagePullPolicy: IfNotPresent
              envFrom:
                - configMapRef:
                    name: default-controller-env
              env:
                - name: SYSTEM_UPGRADE_CONTROLLER_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.labels['upgrade.cattle.io/controller']
                - name: SYSTEM_UPGRADE_CONTROLLER_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              volumeMounts:
                - name: etc-ssl
                  mountPath: /etc/ssl
                - name: tmp
                  mountPath: /tmp
          volumes:
            - name: etc-ssl
              hostPath:
                path: /etc/ssl
                type: Directory
            - name: tmp
              emptyDir: {}

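Breaking down the YAML above, it creates the following components:

The system-upgrade namespace

The system-upgrade service account

The system-upgrade ClusterRoleBinding

A ConfigMap that sets environment variables in the container

The actual Deployment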

Now, let's deploy the YAML:

    # Get the latest release tag
    curl -s "https://api.github.com/repos/rancher/system-upgrade-controller/releases/latest" | awk -F '"' '/tag_name/{print $4}'
    v0.6.2

    # Apply the controller manifest
    kubectl apply -f https://raw.githubusercontent.com/rancher/system-upgrade-controller/v0.6.2/manifests/system-upgrade-controller.yaml
    namespace/system-upgrade created
    serviceaccount/system-upgrade created
    clusterrolebinding.rbac.authorization.k8s.io/system-upgrade created
    configmap/default-controller-env created
    deployment.apps/system-upgrade-controller created

    # Verify everything is running
    kubectl get all -n system-upgrade
    NAME                                             READY   STATUS    RESTARTS   AGE
    pod/system-upgrade-controller-7fff98589f-blcxs   1/1     Running   0          5m26s

    NAME                                        READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/system-upgrade-controller   1/1     1            1           5m28s

    NAME                                                   DESIRED   CURRENT   READY   AGE
    replicaset.apps/system-upgrade-controller-7fff98589f   1         1         1       5m28s
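The tag lookup and the manifest URL can be wrapped in two small helpers so the version is not typed by hand. This is only a sketch: `latest_tag` and `manifest_url` are hypothetical names introduced here, and the awk filter is the same one used in the command above.

```shell
# Extract the value of "tag_name" from GitHub release JSON read on stdin.
# Uses the same awk filter as the one-liner above, stopping at the first match.
latest_tag() {
  awk -F '"' '/tag_name/{print $4; exit}'
}

# Build the raw manifest URL for a given release tag (hypothetical helper).
manifest_url() {
  printf 'https://raw.githubusercontent.com/rancher/system-upgrade-controller/%s/manifests/system-upgrade-controller.yaml\n' "$1"
}

# Usage against the live API (needs network access and kubectl):
#   tag=$(curl -s "https://api.github.com/repos/rancher/system-upgrade-controller/releases/latest" | latest_tag)
#   kubectl apply -f "$(manifest_url "$tag")"
```

Pinning the tag in the URL, rather than pointing at a branch, keeps the applied manifest reproducible.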

Creating a K3s Upgrade Plan

Now it's time to create an upgrade Plan. We will use the sample Plan from the examples folder of the Git repo.

    ---
    apiVersion: upgrade.cattle.io/v1
    kind: Plan
    metadata:
      name: k3s-server
      namespace: system-upgrade
      labels:
        k3s-upgrade: server
    spec:
      concurrency: 1
      version: v1.17.4+k3s1
      nodeSelector:
        matchExpressions:
          - {key: k3s-upgrade, operator: Exists}
          - {key: k3s-upgrade, operator: NotIn, values: ["disabled", "false"]}
          - {key: k3s.io/hostname, operator: Exists}
          - {key: k3os.io/mode, operator: DoesNotExist}
          - {key: node-role.kubernetes.io/master, operator: In, values: ["true"]}
      serviceAccountName: system-upgrade
      cordon: true
    #  drain:
    #    force: true
      upgrade:
        image: rancher/k3s-upgrade
    ---
    apiVersion: upgrade.cattle.io/v1
    kind: Plan
    metadata:
      name: k3s-agent
      namespace: system-upgrade
      labels:
        k3s-upgrade: agent
    spec:
      concurrency: 2
      version: v1.17.4+k3s1
      nodeSelector:
        matchExpressions:
          - {key: k3s-upgrade, operator: Exists}
          - {key: k3s-upgrade, operator: NotIn, values: ["disabled", "false"]}
          - {key: k3s.io/hostname, operator: Exists}
          - {key: k3os.io/mode, operator: DoesNotExist}
          - {key: node-role.kubernetes.io/master, operator: NotIn, values: ["true"]}
      serviceAccountName: system-upgrade
      prepare:
        # Since v0.5.0-m1 SUC will use the resolved version of the plan for the tag on the prepare container.
        # image: rancher/k3s-upgrade:v1.17.4-k3s1
        image: rancher/k3s-upgrade
        args: ["prepare", "k3s-server"]
      drain:
        force: true
      upgrade:
        image: rancher/k3s-upgrade

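Breaking down the YAML above, it creates:

Plans whose match expressions determine which nodes need to be upgraded. In the example above we have two Plans: k3s-server and k3s-agent. Nodes that carry the k3s-upgrade label and have node-role.kubernetes.io/master set to true are picked up by the server Plan. Nodes where that label is not true are picked up by the agent Plan. The labels therefore have to be set correctly. Next, let's apply the Plans.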
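To make the selection rules concrete, the sketch below re-implements the two nodeSelector blocks in plain shell. It is an illustration of the match expressions only, not the controller's code; `label_value` and `plan_for_node` are hypothetical helpers, and the input is assumed to be a comma-separated `key=value` list such as the LABELS column printed by `kubectl get nodes --show-labels`.

```shell
# label_value LABELS KEY
# Prints the value of KEY from a comma-separated "key=value" list.
# Returns non-zero when the key is absent.
label_value() {
  printf '%s\n' "$1" | tr ',' '\n' |
    awk -F '=' -v k="$2" '$1 == k { print $2; found = 1 } END { exit !found }'
}

# plan_for_node LABELS
# Prints "k3s-server", "k3s-agent", or "none" for the given node labels.
plan_for_node() {
  labels=$1

  # {key: k3s-upgrade, operator: Exists}
  upgrade=$(label_value "$labels" k3s-upgrade) || { echo none; return; }

  # {key: k3s-upgrade, operator: NotIn, values: ["disabled", "false"]}
  case $upgrade in
    disabled|false) echo none; return ;;
  esac

  # {key: k3s.io/hostname, operator: Exists}
  label_value "$labels" k3s.io/hostname >/dev/null || { echo none; return; }

  # {key: k3os.io/mode, operator: DoesNotExist}
  if label_value "$labels" k3os.io/mode >/dev/null; then echo none; return; fi

  # master In ["true"] selects the server Plan;
  # NotIn ["true"] (which also matches a missing label) selects the agent Plan.
  if [ "$(label_value "$labels" node-role.kubernetes.io/master)" = "true" ]; then
    echo k3s-server
  else
    echo k3s-agent
  fi
}

# Example:
#   plan_for_node 'k3s-upgrade=true,k3s.io/hostname=kube-node-2404'
```

Under these rules a node without the k3s-upgrade label matches neither Plan, so it is worth checking each node's labels before the Plans are applied with the commands that follow.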

    # Set the node labels
    kubectl label node kube-master-303d node-role.kubernetes.io/master=true

    # Apply the plan manifest
    kubectl apply -f https://raw.githubusercontent.com/rancher/system-upgrade-controller/master/examples/k3s-upgrade.yaml
    plan.upgrade.cattle.io/k3s-server created
    plan.upgrade.cattle.io/k3s-agent created

    # We see that the jobs have started
    kubectl get jobs -n system-upgrade
    NAME                                                              COMPLETIONS   DURATION   AGE
    apply-k3s-server-on-kube-master-303d-with-9efdeac5f6ede78-125aa   0/1           40s        40s
    apply-k3s-agent-on-kube-node-2404-with-9efdeac5f6ede78917-07df3   0/1           39s        39s
    apply-k3s-agent-on-kube-node-c155-with-9efdeac5f6ede78917-9a585   0/1           39s        39s

    # Upgrade in progress, completed on the `node-role.kubernetes.io/master=true` node
    kubectl get nodes
    NAME               STATUS                     ROLES    AGE   VERSION
    kube-node-2404     Ready,SchedulingDisabled   <none>   26h   v1.16.3-k3s.2
    kube-node-c155     Ready,SchedulingDisabled   <none>   26h   v1.16.3-k3s.2
    kube-master-303d   Ready                      master   26h   v1.17.4+k3s1

    # In a few minutes all nodes get upgraded to the latest version as per the plan
    kubectl get nodes
    NAME               STATUS   ROLES    AGE   VERSION
    kube-node-2404     Ready    <none>   26h   v1.17.4+k3s1
    kube-node-c155     Ready    <none>   26h   v1.17.4+k3s1
    kube-master-303d   Ready    master   26h   v1.17.4+k3s1
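Rather than re-running `kubectl get nodes` by eye, the check can be scripted. The sketch below assumes the default table layout shown above, where VERSION is the last column; `all_nodes_at` is a hypothetical helper name.

```shell
# all_nodes_at VERSION
# Reads `kubectl get nodes` output on stdin and succeeds only if at least one
# node row is present and every node row reports VERSION in its last column.
all_nodes_at() {
  awk -v want="$1" '
    NR == 1 { next }          # skip the header row
    NF == 0 { next }          # skip blank lines
    { seen = 1 }              # at least one node row was read
    $NF != want { bad = 1 }   # VERSION is the last column
    END { exit (bad || !seen) }
  '
}

# Example: poll until every node reports the Plan's version.
#   until kubectl get nodes | all_nodes_at 'v1.17.4+k3s1'; do sleep 30; done
```

The function returns a non-zero status while any node is still on the old version, so it can gate later steps in a script.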

Our K3s Kubernetes upgrade is complete, and it was easy and smooth. The project can also update the underlying operating system and reboot nodes. Give it a try!

GitHub repository:

https://github.com/rancher/system-upgrade-controller


Official website: https://k3s.io