Sorting Garbage the Right Way? Use OpenCV AI Image Recognition


OpenCV is a very powerful image processing tool. For anyone working in image processing it is practically indispensable, and it is easy to use. Here is a brief introduction:

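OpenCV is an open-source, cross-platform computer vision and machine learning library, released under the BSD license (versions 4.5.0 and later use the Apache 2.0 license). It runs on Linux, Windows, Android and macOS. It is lightweight and efficient: early versions consisted mainly of C functions, while modern OpenCV is written in C++. It provides interfaces for C++, Python, Java and MATLAB, with support for languages such as C#, Ch, Ruby and Go also available, and it implements many general-purpose image processing and computer vision algorithms. OpenCV is geared toward real-time vision applications and uses MMX and SSE instructions when they are available.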

Useful links:

- Official site: https://opencv.org/
- Tutorials: https://docs.opencv.org/master/d9/df8/tutorial_root.html
- Documentation: https://docs.opencv.org/
- Related discussion on Zhihu: https://www.zhihu.com/question/26881367

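RGB color space:

- R: Red
- G: Green
- B: Blue

HSV color space:

- H: Hue
- S: Saturation
- V: Value (brightness)

HSI color space:

- H: Hue
- S: Saturation
- I: Intensity

The code below works in HSV. Because hue is stored separately from brightness, a color can be selected with simple lower and upper bounds per channel, which is much harder to do in RGB. Note that OpenCV loads images in BGR order, not RGB.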
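To make OpenCV's 8-bit HSV ranges concrete, here is a minimal sketch using Python's standard `colorsys` module. The helper `bgr_to_opencv_hsv` is hypothetical and only approximates `cv2.cvtColor(..., cv2.COLOR_BGR2HSV)` on `uint8` images, assuming OpenCV's convention of storing H as degrees divided by 2 (0-179) and S, V scaled to 0-255.

```python
import colorsys


def bgr_to_opencv_hsv(b, g, r):
    """Approximate OpenCV's 8-bit BGR -> HSV conversion for a single pixel.

    Hypothetical helper: colorsys works on floats in [0, 1], so the result
    is rescaled to OpenCV's uint8 convention (H in 0-179, S and V in 0-255).
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    # h is a fraction of a full turn: 360 degrees / 2 = 180 steps, wrapped to 0-179
    return (round(h * 180) % 180, round(s * 255), round(v * 255))


# OpenCV stores pixels in B, G, R order
for name, bgr in [("red", (0, 0, 255)), ("green", (0, 255, 0)), ("blue", (255, 0, 0))]:
    print(name, bgr_to_opencv_hsv(*bgr))
```

Pure green lands at H = 60 rather than 120 and pure blue at 120 rather than 240, which is why the Hue trackbars in the viewer below stop at 179.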

#!usr/bin/env python

# encoding:utf-8

from __future__ import division

'''

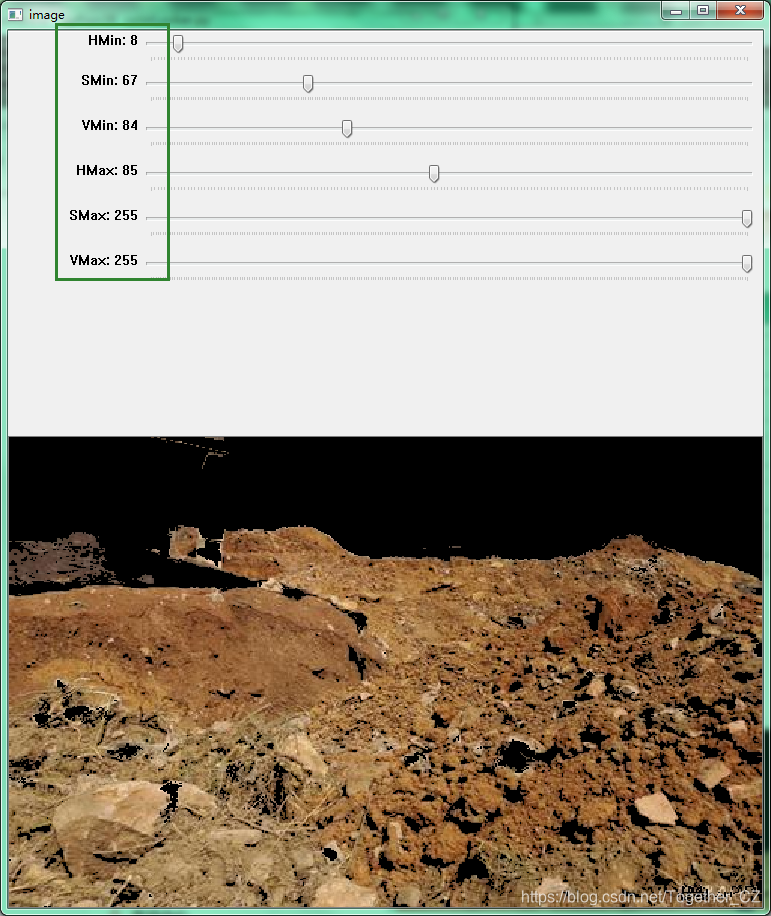

功能:HSV空间图片色素范围查看器

'''

import cv2

import numpy as np

def nothing(x):

pass

def colorLooker(pic='1.png'):

'''

HSV空间图片色素范围查看器

'''

#图像加载

image = cv2.imread(pic)

#窗口初始化

cv2.namedWindow('image',cv2.WINDOW_NORMAL)

#创建拖动条

#Opencv中Hue取值范围是0-179

cv2.createTrackbar('HMin', 'image', 0, 179, nothing)

cv2.createTrackbar('SMin', 'image', 0, 255, nothing)

cv2.createTrackbar('VMin', 'image', 0, 255, nothing)

cv2.createTrackbar('HMax', 'image', 0, 179, nothing)

cv2.createTrackbar('SMax', 'image', 0, 255, nothing)

cv2.createTrackbar('VMax', 'image', 0, 255, nothing)

#设置默认最大值

cv2.setTrackbarPos('HMax', 'image', 179)

cv2.setTrackbarPos('SMax', 'image', 255)

cv2.setTrackbarPos('VMax', 'image', 255)

#初始化设置

hMin = sMin = vMin = hMax = sMax = vMax = 0

phMin = psMin = pvMin = phMax = psMax = pvMax = 0

while(1):

#实时获取拖动条上的值

hMin = cv2.getTrackbarPos('HMin', 'image')

sMin = cv2.getTrackbarPos('SMin', 'image')

vMin = cv2.getTrackbarPos('VMin', 'image')

hMax = cv2.getTrackbarPos('HMax', 'image')

sMax = cv2.getTrackbarPos('SMax', 'image')

vMax = cv2.getTrackbarPos('VMax', 'image')

#设定HSV的最大和最小值

lower = np.array([hMin, sMin, vMin])

upper = np.array([hMax, sMax, vMax])

#BGR和HSV颜色空间转化处理

hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

mask = cv2.inRange(hsv, lower, upper)

result = cv2.bitwise_and(image, image, mask=mask)

#拖动改变阈值的同时,实时输出调整的信息

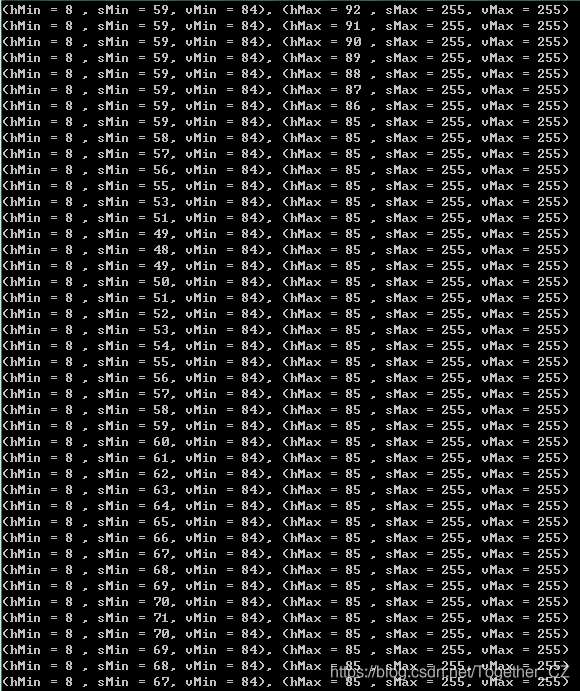

if((phMin != hMin) | (psMin != sMin) | (pvMin != vMin) | (phMax != hMax) | (psMax != sMax) | (pvMax != vMax) ):

print("(hMin = %d , sMin = %d, vMin = %d), (hMax = %d , sMax = %d, vMax = %d)" % (hMin , sMin , vMin, hMax, sMax , vMax))

phMin = hMin

psMin = sMin

pvMin = vMin

phMax = hMax

psMax = sMax

pvMax = vMax

#展示由色素带阈值范围处理过的结果图片

cv2.imshow('image', result)

if cv2.waitKey(10) & 0xFF == ord('q'):

break

cv2.destroyAllWindows()

if __name__ == '__main__':

colorLooker(pic='1.png')
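The viewer relies on `cv2.inRange`, which sets a pixel to 255 when every channel lies between the corresponding lower and upper bound (both inclusive) and to 0 otherwise. The NumPy sketch below mimics that behaviour for illustration only; `in_range` is a hypothetical name, not an OpenCV function, and the red ranges 0-10 and 170-179 are example values, not tuned thresholds. It also shows a consequence of the 0-179 hue scale: red sits at both ends of the hue circle, so one `[lower, upper]` range cannot cover it and two masks have to be combined.

```python
import numpy as np


def in_range(hsv, lower, upper):
    """NumPy sketch of cv2.inRange: 255 where every channel is in [lower, upper]."""
    lower = np.asarray(lower)
    upper = np.asarray(upper)
    inside = np.all((hsv >= lower) & (hsv <= upper), axis=-1)
    return inside.astype(np.uint8) * 255


# A 1x4 HSV "image": two reds at opposite ends of the hue circle, one green, one blue
hsv = np.array([[[2, 200, 200], [176, 200, 200], [60, 200, 200], [120, 200, 200]]],
               dtype=np.uint8)

# Red wraps around H = 0/179, so it needs two ranges combined with a bitwise OR
low_red = in_range(hsv, (0, 100, 100), (10, 255, 255))
high_red = in_range(hsv, (170, 100, 100), (179, 255, 255))
red_mask = low_red | high_red
print(red_mask)  # the first two pixels are 255 (red), the other two are 0
```

With OpenCV itself, the same combination would be two `cv2.inRange` calls joined with `cv2.bitwise_or`.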

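With a suitable range found, the next script applies it to the image, measures the share of matching pixels, and binarizes the result for contour detection:

```python
import cv2
import numpy as np
from PIL import Image

# Load with PIL; convert('RGB') drops any alpha channel, then switch to OpenCV's BGR order
img = Image.open('1.png').convert('RGB')
img = cv2.cvtColor(np.asarray(img), cv2.COLOR_RGB2BGR)

frame = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
blur = cv2.GaussianBlur(frame, (21, 21), 0)
# NOTE: `frame` is already HSV, so this second conversion treats HSV data as BGR.
# It is kept to match the sample output below; a conventional pipeline would
# blur `img` and convert to HSV only once.
hsv = cv2.cvtColor(blur, cv2.COLOR_BGR2HSV)

h, w, channels = img.shape
total = h * w
print('h: ', h, 'w: ', w, 'area: ', total)

# HSV threshold values
lower = np.array([8, 67, 84], dtype="uint8")
upper = np.array([85, 255, 255], dtype="uint8")

mask = cv2.inRange(hsv, lower, upper)
output = cv2.bitwise_and(hsv, hsv, mask=mask)

# Share of pixels that fall inside the range
count = cv2.countNonZero(mask)
print('count: ', count)
now_ratio = round(count / total, 3)
print('now_ratio: ', now_ratio)
print('output_shape: ', output.shape)

# Binarize for contour detection (output holds HSV data, converted as if it were BGR)
gray = cv2.cvtColor(output, cv2.COLOR_BGR2GRAY)
print('gray_shape: ', gray.shape)
ret, output = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)
```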
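The script passes `0` as the sigma of `cv2.GaussianBlur`, which tells OpenCV to derive sigma from the kernel size. The documentation of `cv2.getGaussianKernel` gives the rule sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8, so the 21x21 kernel used here has sigma = 3.5. The plain-Python sketch below computes the corresponding normalized 1D weights; `gaussian_kernel_1d` is a hypothetical helper that illustrates the formula, not OpenCV's actual code path. The 2D kernel is separable, i.e. the outer product of this 1D kernel with itself.

```python
import math


def gaussian_kernel_1d(ksize, sigma=0.0):
    """Normalized 1D Gaussian weights, using OpenCV's rule when sigma <= 0."""
    if sigma <= 0:
        # Default documented for cv2.getGaussianKernel when sigma is not positive
        sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8
    center = (ksize - 1) / 2
    weights = [math.exp(-((i - center) ** 2) / (2 * sigma ** 2)) for i in range(ksize)]
    total = sum(weights)
    return sigma, [w / total for w in weights]


sigma, kernel = gaussian_kernel_1d(21)
print(round(sigma, 3))                    # 3.5
print(len(kernel), round(sum(kernel), 6))  # 21 1.0
```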

'''

cv2.findContours:

在opencv中查找轮廓时,物体应该是白色而背景应该是黑色

contours, hierarchy = cv2.findContours(image,mode,method)

image:输入图像

mode:轮廓的模式。cv2.RETR_EXTERNAL只检测外轮廓;cv2.RETR_LIST检测的轮廓不建立等级关系;cv2.RETR_CCOMP建立两个等级的轮廓,上一层为外边界,内层为内孔的边界。如果内孔内还有连通物体,则这个物体的边界也在顶层;cv2.RETR_TREE建立一个等级树结构的轮廓。

method:轮廓的近似方法。cv2.CHAIN_APPROX_NOME存储所有的轮廓点,相邻的两个点的像素位置差不超过1;cv2.CHAIN_APPROX_SIMPLE压缩水平方向、垂直方向、对角线方向的元素,只保留该方向的终点坐标,例如一个矩形轮廓只需要4个点来保存轮廓信息;cv2.CHAIN_APPROX_TC89_L1,cv2.CV_CHAIN_APPROX_TC89_KCOS

contours:返回的轮廓

hierarchy:每条轮廓对应的属性

'''

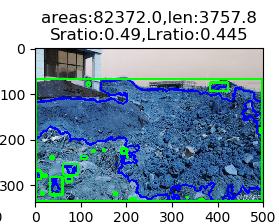
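The idea behind `CHAIN_APPROX_SIMPLE` can be sketched in plain Python: walk a closed contour and keep a point only where the step direction changes. This is an illustration of the principle, assuming consecutive points are 8-connected neighbours; `compress_chain` and `trace_rectangle` are hypothetical helpers, not OpenCV's implementation.

```python
def compress_chain(points):
    """Drop points in the middle of straight horizontal, vertical or diagonal
    runs of a closed, 8-connected contour; keep only the corners."""
    n = len(points)
    kept = []
    for i in range(n):
        px, py = points[i - 1]          # previous point (index -1 wraps around)
        cx, cy = points[i]
        nx, ny = points[(i + 1) % n]    # next point (wraps around)
        step_in = (cx - px, cy - py)
        step_out = (nx - cx, ny - cy)
        if step_in != step_out:         # direction changes here: a corner
            kept.append(points[i])
    return kept


def trace_rectangle(x0, y0, x1, y1):
    """Every boundary pixel of an axis-aligned rectangle, in traversal order."""
    top = [(x, y0) for x in range(x0, x1)]
    right = [(x1, y) for y in range(y0, y1)]
    bottom = [(x, y1) for x in range(x1, x0, -1)]
    left = [(x0, y) for y in range(y1, y0, -1)]
    return top + right + bottom + left


full = trace_rectangle(0, 0, 5, 3)
print(len(full), compress_chain(full))  # 16 [(0, 0), (5, 0), (5, 3), (0, 3)]
```

The 16 boundary pixels of the rectangle collapse to its 4 corners, matching the description of `cv2.CHAIN_APPROX_SIMPLE` above.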

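```python
# Contour detection on the binary image from the previous step.
# The [-2:] slice keeps this working on OpenCV 3.x, which returns an extra value first.
contours, hierarchy = cv2.findContours(output, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2:]
print('contours_num: ', len(contours))


def filterBox(items):
    # Hypothetical stand-in: the article does not show filterBox. This version
    # keeps a box only if it is not fully inside a larger box kept earlier.
    kept = []
    for _, box in items:  # items are sorted by A, largest first
        minX, maxX, minY, maxY = box[3]
        if not any(k[3][0] <= minX and maxX <= k[3][1] and k[3][2] <= minY and maxY <= k[3][3]
                   for k in kept):
            kept.append(box)
    return kept


result = {}  # container for the per-contour measurements
count_dict = {}
areas, lengths = 0, 0
for i, one in enumerate(contours):
    if len(one) >= 2:
        area = cv2.contourArea(one)
        length = cv2.arcLength(one, True)
        areas += area
        lengths += length
        # Each contour has shape (N, 1, 2): column 0 holds x, column 1 holds y
        xs, ys = one[:, 0, 0], one[:, 0, 1]
        minX, maxX, minY, maxY = int(xs.min()), int(xs.max()), int(ys.min()), int(ys.max())
        # A: area spanned by the contour's x and y coordinate ranges
        A = (maxY - minY) * (maxX - minX)
        print('area: ', area, 'A: ', A, 'length: ', length)
        count_dict[i] = [A, area, length, [minX, maxX, minY, maxY]]

# Sort contours by A, largest first
sorted_list = sorted(count_dict.items(), key=lambda e: e[1][0], reverse=True)
print(sorted_list[:10])
result['value'] = count_dict

# Draw all contours in red, then the filtered boxes in green
cv2.drawContours(img, contours, -1, (0, 0, 255), 3)
if sorted_list:
    filter_list = filterBox(sorted_list[:5])
    for one_box in filter_list:
        print('one_box: ', one_box)
        A, area, length, [minX, maxX, minY, maxY] = one_box
        cv2.rectangle(img, (minX, maxY), (maxX, minY), (0, 255, 0), 3)
```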
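The loop records two different areas per contour: `area` from `cv2.contourArea`, which OpenCV documents as computed with Green's formula (equivalent to the shoelace formula for a polygon), and `A`, the product of the contour's x and y spans. The plain-Python sketch below, with hypothetical helper names and contours given as lists of (x, y) vertices, shows why thin contours in the sample output report `area` 0.0 while `A` is positive.

```python
def polygon_area(points):
    """Shoelace formula: area of a closed polygon from its vertices in order."""
    n = len(points)
    twice_area = 0
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2


def span_box_area(points):
    """The script's A: (maxX - minX) * (maxY - minY)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


for name, pts in [
    ("segment", [(0, 0), (2, 1)]),
    ("triangle", [(0, 0), (4, 0), (0, 3)]),
    ("rectangle", [(0, 0), (4, 0), (4, 3), (0, 3)]),
]:
    print(name, polygon_area(pts), span_box_area(pts))
```

A two-point "contour" encloses nothing (area 0.0) yet spans a 2 x 1 box (A = 2), while `A` and `area` agree only for axis-aligned rectangles.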

('h: ', 336L, 'w: ', 500L, 'area: ', 168000L)

('count: ', 126387)

('now_ratio: ', 0.752)

('output_shape: ', (336L, 500L, 3L))

('gray_shape: ', (336L, 500L))

('contours_num: ', 64)

('area: ', 0.0, 'A: ', 2, 'length: ', 4.828427076339722)

('area: ', 0.0, 'A: ', 0, 'length: ', 4.0)

('area: ', 29.0, 'A: ', 44, 'length: ', 27.313708186149597)

('area: ', 42.5, 'A: ', 72, 'length: ', 34.72792184352875)

('area: ', 0.5, 'A: ', 6, 'length: ', 9.071067690849304)

('area: ', 0.0, 'A: ', 10, 'length: ', 11.656854152679443)

('area: ', 0.0, 'A: ', 1, 'length: ', 2.8284270763397217)

('area: ', 0.0, 'A: ', 2, 'length: ', 4.828427076339722)

('area: ', 1.5, 'A: ', 2, 'length: ', 5.414213538169861)

('area: ', 16.5, 'A: ', 36, 'length: ', 27.55634891986847)

('area: ', 5.0, 'A: ', 36, 'length: ', 37.79898953437805)

('area: ', 1.5, 'A: ', 3, 'length: ', 8.242640614509583)

('area: ', 0.0, 'A: ', 0, 'length: ', 2.0)

('area: ', 2.0, 'A: ', 2, 'length: ', 6.0)

('area: ', 360.0, 'A: ', 1026, 'length: ', 206.93607211112976)

('area: ', 44.0, 'A: ', 143, 'length: ', 59.94112479686737)

('area: ', 0.0, 'A: ', 1, 'length: ', 2.8284270763397217)

('area: ', 0.0, 'A: ', 1, 'length: ', 2.8284270763397217)

('area: ', 33.5, 'A: ', 60, 'length: ', 30.38477599620819)

('area: ', 76.5, 'A: ', 228, 'length: ', 63.35533845424652)

('area: ', 320.0, 'A: ', 792, 'length: ', 166.9949471950531)

('area: ', 16.0, 'A: ', 35, 'length: ', 21.313708305358887)

('area: ', 0.0, 'A: ', 8, 'length: ', 10.828427076339722)

('area: ', 21.0, 'A: ', 78, 'length: ', 37.79898953437805)

('area: ', 0.0, 'A: ', 1, 'length: ', 2.8284270763397217)

('area: ', 0.0, 'A: ', 2, 'length: ', 4.828427076339722)

('area: ', 0.0, 'A: ', 2, 'length: ', 4.828427076339722)

('area: ', 3.5, 'A: ', 25, 'length: ', 20.727921843528748)

('area: ', 1.5, 'A: ', 12, 'length: ', 13.071067690849304)

('area: ', 51.0, 'A: ', 121, 'length: ', 53.94112491607666)

('area: ', 0.0, 'A: ', 1, 'length: ', 2.8284270763397217)

('area: ', 32.5, 'A: ', 50, 'length: ', 27.899494767189026)

('area: ', 309.5, 'A: ', 722, 'length: ', 96.32590079307556)

('area: ', 34.0, 'A: ', 42, 'length: ', 22.485281229019165)

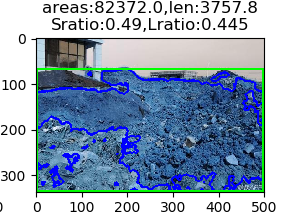

('area: ', 80970.5, 'A: ', 132699, 'length: ', 2718.5739262104034)

[(63, [132699, 80970.5, 2718.5739262104034, [1, 498, 67, 334]]), (24, [1026, 360.0, 206.93607211112976, [33, 60, 281, 319]]), (39, [792, 320.0, 166.9949471950531, [61, 94, 252, 276]]), (61, [722, 309.5, 96.32590079307556, [384, 422, 75, 94]]), (34, [228, 76.5, 63.35533845424652, [1, 13, 267, 286]]), (25, [143, 44.0, 59.94112479686737, [68, 81, 280, 291]]), (55, [121, 51.0, 53.94112491607666, [189, 200, 219, 230]]), (47, [78, 21.0, 37.79898953437805, [100, 113, 235, 241]]), (4, [72, 42.5, 34.72792184352875, [209, 221, 328, 334]]), (32, [60, 33.5, 30.38477599620819, [15, 25, 274, 280]])]

('one_box: ', [132699, 80970.5, 2718.5739262104034, [1, 498, 67, 334]])
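The key numbers in the sample output can be checked by hand. The pixel ratio is the mask count divided by the image area, and `A` for the largest contour follows from its `[minX, maxX, minY, maxY] = [1, 498, 67, 334]`:

$$
\text{now\_ratio} = \frac{126387}{336 \times 500} = \frac{126387}{168000} \approx 0.752
$$

$$
A = (498 - 1) \times (334 - 67) = 497 \times 267 = 132699
$$

So about 75% of the pixels fall inside the HSV range, and the one box that gets drawn spans almost the entire 500 x 336 frame.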

Author: 沂水寒城, CSDN blog expert; research interests: machine learning, deep learning, NLP, CV

Blog: http://yishuihancheng.blog.csdn.net
