Hardware Video Decoding on Android with FFmpeg + MediaCodec

This article uses FFmpeg + MediaCodec to build a player that supports hardware video decoding and audio/video synchronization.

MediaCodec Overview

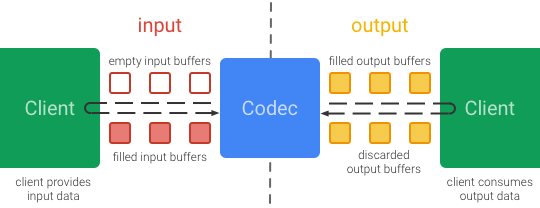

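MediaCodec is the Android class for encoding and decoding audio and video. It works by accessing the underlying low-level codecs and is part of Android's low-level media framework. It is normally used together with MediaExtractor, MediaSync, MediaMuxer, MediaCrypto, MediaDrm, Image, Surface, and AudioTrack.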

For details, see the official documentation: https://developer.android.com/reference/android/media/MediaCodec.html

AMediaCodec is the native (NDK) interface to MediaCodec, which Google has provided since Android 5.0 (API level 21). Native code that uses it must link against the mediandk library. Official sample:

https://github.com/android/ndk-samples/tree/main/native-codec

FFmpeg + AMediaCodec

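Before Android exposed the MediaCodec interface at the native layer, hardware decoding with FFmpeg required copying the video and audio data up to the Java layer and calling MediaCodec there (invoking Java object methods through JNI).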

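This article combines FFmpeg with AMediaCodec: FFmpeg handles demuxing and audio decoding, while MediaCodec decodes the video and renders it to a Surface (ANativeWindow). Demuxing, audio decoding, and video decoding each run on their own worker thread, and queues carry the audio and video packets between them.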
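The thread layout described above can be modeled with standard C++ threads. The following is a minimal sketch, not the project's code: DemoPacket, BlockingQueue and RunPipeline are hypothetical names, the two consumer threads only count packets instead of decoding them, and a condition variable is used for waiting, whereas the actual player uses its own AVPacketQueue class whose internals are not shown in the article.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <deque>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical packet type: only the fields the routing logic needs.
struct DemoPacket {
    int streamIndex;
    int64_t pts;
};

// Unbounded blocking FIFO. Pop() waits for data and returns false once the
// queue has been closed and fully drained (end of stream).
template <typename T>
class BlockingQueue {
public:
    void Push(T value) {
        {
            std::lock_guard<std::mutex> lock(m_Mutex);
            m_Items.push_back(std::move(value));
        }
        m_Cond.notify_one();
    }

    void Close() {
        {
            std::lock_guard<std::mutex> lock(m_Mutex);
            m_Closed = true;
        }
        m_Cond.notify_all();
    }

    bool Pop(T* out) {
        std::unique_lock<std::mutex> lock(m_Mutex);
        m_Cond.wait(lock, [this] { return m_Closed || !m_Items.empty(); });
        if (m_Items.empty()) return false;  // closed and drained
        *out = std::move(m_Items.front());
        m_Items.pop_front();
        return true;
    }

private:
    std::mutex m_Mutex;
    std::condition_variable m_Cond;
    std::deque<T> m_Items;
    bool m_Closed = false;
};

// One "demux" thread routes packets into a video queue and an audio queue;
// one consumer thread per queue stands in for a decoder and counts packets.
void RunPipeline(const std::vector<DemoPacket>& input, int videoIdx, int audioIdx,
                 int* videoCount, int* audioCount) {
    assert(videoIdx != audioIdx);
    BlockingQueue<DemoPacket> videoQueue, audioQueue;
    *videoCount = 0;
    *audioCount = 0;

    std::thread demuxer([&] {
        for (const DemoPacket& pkt : input) {
            if (pkt.streamIndex == videoIdx) videoQueue.Push(pkt);
            else if (pkt.streamIndex == audioIdx) audioQueue.Push(pkt);
            // packets from any other stream are dropped
        }
        videoQueue.Close();
        audioQueue.Close();
    });
    std::thread videoDecoder([&] { DemoPacket p{}; while (videoQueue.Pop(&p)) ++*videoCount; });
    std::thread audioDecoder([&] { DemoPacket p{}; while (audioQueue.Pop(&p)) ++*audioCount; });

    demuxer.join();
    videoDecoder.join();
    audioDecoder.join();
}
```

Packets whose stream index matches neither track are discarded, which corresponds to the av_packet_unref branch of the demux loop; closing both queues at end of stream lets the consumer threads exit cleanly instead of polling.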

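Note: this demo handles H.264 video. The AVPacket data that FFmpeg demuxes (for example from an MP4 container) is in AVCC format, where each NALU is prefixed with its length (typically 4 bytes), rather than standard Annex-B NALUs with start codes. The h264_mp4toannexb bitstream filter, applied with av_bitstream_filter_filter, replaces each 4-byte length prefix with the start code 0x00000001 to produce standard NALU data, which ensures MediaCodec decodes correctly. av_bitstream_filter_filter is deprecated in newer FFmpeg versions in favor of av_bsf_send_packet()/av_bsf_receive_packet().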
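To make the conversion concrete, the following is a minimal sketch of what the AVCC-to-Annex-B step does to a single packet, assuming 4-byte length prefixes (the common case). AvccToAnnexB is a hypothetical helper, not an FFmpeg API. In the player the bitstream filter does this work and also inserts SPS/PPS in front of key frames, which this sketch deliberately omits.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts one H.264 access unit from AVCC layout (each NALU preceded by a
// 4-byte big-endian length) to Annex-B layout (each NALU preceded by the
// 00 00 00 01 start code). Returns an empty vector if the input is malformed.
// Unlike h264_mp4toannexb, it does NOT insert SPS/PPS before key frames.
std::vector<uint8_t> AvccToAnnexB(const std::vector<uint8_t>& avcc) {
    static const uint8_t kStartCode[4] = {0x00, 0x00, 0x00, 0x01};
    std::vector<uint8_t> out;
    out.reserve(avcc.size());
    size_t pos = 0;
    while (pos < avcc.size()) {
        if (avcc.size() - pos < 4) return {};  // truncated length field
        uint32_t naluSize = (uint32_t(avcc[pos]) << 24) | (uint32_t(avcc[pos + 1]) << 16) |
                            (uint32_t(avcc[pos + 2]) << 8) | uint32_t(avcc[pos + 3]);
        pos += 4;
        if (naluSize > avcc.size() - pos) return {};  // NALU runs past the end
        out.insert(out.end(), kStartCode, kStartCode + 4);
        auto first = avcc.begin() + static_cast<std::ptrdiff_t>(pos);
        out.insert(out.end(), first, first + static_cast<std::ptrdiff_t>(naluSize));
        pos += naluSize;
    }
    // A 4-byte length is replaced by a 4-byte start code, so the size is unchanged.
    assert(out.size() == avcc.size());
    return out;
}
```

Because the prefix and the start code are both 4 bytes, the conversion can also be done in place, which is essentially the "replace the first 4 bytes" operation described above, applied to every NALU in the packet.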

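Configure the AMediaCodec object to decode only the video stream. Here AMediaExtractor is used only to read the video track's AMediaFormat (MIME type, resolution, codec-specific data) for configuring the decoder; the packets themselves come from FFmpeg: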

```cpp
m_MediaExtractor = AMediaExtractor_new();
media_status_t err = AMediaExtractor_setDataSourceFd(m_MediaExtractor, fd,
        static_cast<off64_t>(outStart), static_cast<off64_t>(outLen));
close(fd);
if (err != AMEDIA_OK) {
    result = -1;
    LOGCATE("HWCodecPlayer::InitDecoder AMediaExtractor_setDataSourceFd fail. err=%d", err);
    break; // leaves the enclosing loop, which is not shown in this excerpt
}

int numTracks = AMediaExtractor_getTrackCount(m_MediaExtractor);
LOGCATE("HWCodecPlayer::InitDecoder AMediaExtractor_getTrackCount %d tracks", numTracks);

for (int i = 0; i < numTracks; i++) {
    AMediaFormat *format = AMediaExtractor_getTrackFormat(m_MediaExtractor, i);
    const char *s = AMediaFormat_toString(format);
    LOGCATE("HWCodecPlayer::InitDecoder track %d format: %s", i, s);
    const char *mime;
    if (!AMediaFormat_getString(format, AMEDIAFORMAT_KEY_MIME, &mime)) {
        LOGCATE("HWCodecPlayer::InitDecoder no mime type");
        result = -1;
        AMediaFormat_delete(format); // do not leak the format on the error path
        break;
    } else if (!strncmp(mime, "video/", 6)) {
        // Omitting most error handling for clarity.
        // Production code should check for errors.
        AMediaExtractor_selectTrack(m_MediaExtractor, i);
        m_MediaCodec = AMediaCodec_createDecoderByType(mime);
        // Passing m_ANativeWindow makes the decoder render directly to the Surface
        AMediaCodec_configure(m_MediaCodec, format, m_ANativeWindow, NULL, 0);
        AMediaCodec_start(m_MediaCodec);
    }
    AMediaFormat_delete(format);
}
```

FFmpeg demuxes on one thread and puts the encoded audio and video packets into two separate queues.

```cpp
int HWCodecPlayer::DoMuxLoop() {
    LOGCATE("HWCodecPlayer::DoMuxLoop start");
    int result = 0;
    AVPacket avPacket = {0};
    for (;;) {
        double passTimes = 0;
        ......
        if (m_SeekPosition >= 0) { // a seek has been requested
            // seek to frame
            LOGCATE("HWCodecPlayer::DoMuxLoop seeking m_SeekPosition=%f", m_SeekPosition);
            ......
        }

        result = av_read_frame(m_AVFormatContext, &avPacket);
        if (result >= 0) {
            // Buffered video duration: time_base units converted to seconds
            double bufferDuration = m_VideoPacketQueue->GetDuration() * av_q2d(m_VideoTimeBase);
            LOGCATE("HWCodecPlayer::DoMuxLoop bufferDuration=%lfs", bufferDuration);
            // Avoid buffering too many packets: wait while the video queue
            // holds more than BUFF_MAX_VIDEO_DURATION seconds
            while (BUFF_MAX_VIDEO_DURATION < bufferDuration && m_PlayerState == PLAYER_STATE_PLAYING && m_SeekPosition < 0) {
                bufferDuration = m_VideoPacketQueue->GetDuration() * av_q2d(m_VideoTimeBase);
                usleep(10 * 1000);
            }
            // Put encoded video and audio packets into their own queues
            if (avPacket.stream_index == m_VideoStreamIdx) {
                m_VideoPacketQueue->PushPacket(&avPacket);
            } else if (avPacket.stream_index == m_AudioStreamIdx) {
                m_AudioPacketQueue->PushPacket(&avPacket);
            } else {
                av_packet_unref(&avPacket); // drop packets from other streams
            }
        } else {
            // Demuxing finished (end of file or read error): pause the player
            std::unique_lock<std::mutex> lock(m_Mutex);
            m_PlayerState = PLAYER_STATE_PAUSE;
        }
    }
    LOGCATE("HWCodecPlayer::DoMuxLoop end");
    return 0;
}
```
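The throttling in the demux loop relies on the queue knowing how much media it currently holds. As a sketch of that idea only (the article does not show how AVPacketQueue is implemented), a queue can keep a running sum of packet durations in time_base units; multiplying that sum by the time base, which is what av_q2d(m_VideoTimeBase) does, yields seconds. Packet, PacketQueue and ToSeconds are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical stand-in for AVPacket; pts and duration are in time_base units.
struct Packet {
    int64_t pts = 0;
    int64_t duration = 0;
    std::vector<uint8_t> data;
};

// Thread-safe FIFO that also tracks the total buffered duration.
class PacketQueue {
public:
    void Push(Packet pkt) {
        assert(pkt.duration >= 0);
        std::lock_guard<std::mutex> lock(m_Mutex);
        m_Duration += pkt.duration;
        m_Packets.push_back(std::move(pkt));
    }

    // Moves the oldest packet into *out; returns false if the queue is empty.
    bool Pop(Packet* out) {
        std::lock_guard<std::mutex> lock(m_Mutex);
        if (m_Packets.empty()) return false;
        *out = std::move(m_Packets.front());
        m_Packets.pop_front();
        m_Duration -= out->duration;
        return true;
    }

    // Sum of buffered packet durations, in time_base units.
    int64_t GetDuration() {
        std::lock_guard<std::mutex> lock(m_Mutex);
        return m_Duration;
    }

    size_t Size() {
        std::lock_guard<std::mutex> lock(m_Mutex);
        return m_Packets.size();
    }

private:
    std::mutex m_Mutex;
    std::deque<Packet> m_Packets;
    int64_t m_Duration = 0;
};

// duration * num / den, i.e. the same value as duration * av_q2d({num, den}).
double ToSeconds(int64_t duration, int num, int den) {
    assert(den != 0);
    return static_cast<double>(duration) * num / den;
}
```

With a 1/90000 time base, two queued packets of 3000 ticks each amount to 6000 ticks, about 0.067 s; the demux thread would compare that value against BUFF_MAX_VIDEO_DURATION before pushing more packets.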

In the video decoding thread, the native code uses AMediaCodec to decode the video, taking packets from the video AVPacket queue.

```cpp
void HWCodecPlayer::VideoDecodeThreadProc(HWCodecPlayer *player) {
    LOGCATE("HWCodecPlayer::VideoDecodeThreadProc start");
    AVPacketQueue* videoPacketQueue = player->m_VideoPacketQueue;
    AMediaCodec* videoCodec = player->m_MediaCodec;
    AVPacket *packet = av_packet_alloc();
    for (;;) {
        ....
        // With a 0 timeout this returns immediately; if no input buffer is free,
        // the current packet is unreferenced below without being decoded.
        ssize_t bufIdx = AMediaCodec_dequeueInputBuffer(videoCodec, 0);
        if (bufIdx >= 0) {
            size_t bufSize;
            uint8_t *buf = AMediaCodec_getInputBuffer(videoCodec, bufIdx, &bufSize);
            // Convert AVCC NALUs to Annex-B. Use a separate output pointer: when the
            // filter returns > 0 it allocates a new buffer that must be av_free()d.
            uint8_t *outData = nullptr;
            int outSize = 0;
            int ret = av_bitstream_filter_filter(player->m_Bsfc, player->m_VideoCodecCtx, NULL,
                                                 &outData, &outSize, packet->data, packet->size,
                                                 packet->flags & AV_PKT_FLAG_KEY);
            size_t inputSize = 0;
            if (ret >= 0 && outSize >= 4 && static_cast<size_t>(outSize) <= bufSize) {
                LOGCATI("HWCodecPlayer::VideoDecodeThreadProc 0x%02X 0x%02X 0x%02X 0x%02X \n",
                        outData[0], outData[1], outData[2], outData[3]);
                memcpy(buf, outData, outSize);
                inputSize = outSize;
            }
            // Always hand the dequeued buffer back (empty if filtering failed)
            AMediaCodec_queueInputBuffer(videoCodec, bufIdx, 0, inputSize, packet->pts, 0);
            if (ret > 0) av_free(outData);
        }
        av_packet_unref(packet);

        AMediaCodecBufferInfo info;
        ssize_t status = AMediaCodec_dequeueOutputBuffer(videoCodec, &info, 1000);
        LOGCATI("HWCodecPlayer::VideoDecodeThreadProc status: %zd\n", status);
        if (status >= 0) {
            SyncClock* videoClock = &player->m_VideoClock;
            // packet->pts was queued in time_base units, so presentationTimeUs carries
            // time_base units as well; this converts it to milliseconds.
            double presentationMs = info.presentationTimeUs * av_q2d(player->m_VideoTimeBase) * 1000;
            videoClock->SetClock(presentationMs, GetSysCurrentTime());
            player->AVSync(); // audio/video synchronization
            LOGCATI("HWCodecPlayer::VideoDecodeThreadProc sync video curPts = %lf", presentationMs);
            size_t size;
            // With an output Surface configured, this may return nullptr
            uint8_t* buffer = AMediaCodec_getOutputBuffer(videoCodec, status, &size);
            LOGCATI("HWCodecPlayer::VideoDecodeThreadProc buffer: %p, buffer size: %zu", buffer, size);
            // render == true sends the frame to the Surface (ANativeWindow)
            AMediaCodec_releaseOutputBuffer(videoCodec, status, info.size != 0);
        } else if (status == AMEDIACODEC_INFO_OUTPUT_BUFFERS_CHANGED) {
            LOGCATI("HWCodecPlayer::VideoDecodeThreadProc output buffers changed");
        } else if (status == AMEDIACODEC_INFO_OUTPUT_FORMAT_CHANGED) {
            LOGCATI("HWCodecPlayer::VideoDecodeThreadProc output format changed");
        } else if (status == AMEDIACODEC_INFO_TRY_AGAIN_LATER) {
            LOGCATI("HWCodecPlayer::VideoDecodeThreadProc no output buffer right now");
        } else {
            LOGCATI("HWCodecPlayer::VideoDecodeThreadProc unexpected info code: %zd", status);
        }
        if (isLocked) lock.unlock();
    }

    if (packet != nullptr) {
        av_packet_free(&packet);
        packet = nullptr;
    }
    LOGCATE("HWCodecPlayer::VideoDecodeThreadProc end");
}
```
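Because packet->pts is passed to AMediaCodec_queueInputBuffer unchanged, the decoder generally echoes that value back in info.presentationTimeUs, so the field holds stream time_base units rather than microseconds, and pts × av_q2d(time_base) × 1000 is a value in milliseconds. If you prefer MediaCodec to see true microseconds, one option is to rescale before queueing, for example with av_rescale_q(packet->pts, time_base, AVRational{1, 1000000}). The sketch below shows both conversions in plain C++ so the arithmetic can be checked by hand; Rational, PtsToMs and PtsToUs are hypothetical helpers, not FFmpeg functions.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical rational type mirroring FFmpeg's AVRational {num, den}.
struct Rational {
    int num;
    int den;
};

// Timestamp in time_base units -> milliseconds,
// the same arithmetic as pts * av_q2d(time_base) * 1000.
double PtsToMs(int64_t pts, Rational tb) {
    assert(tb.den != 0);
    return static_cast<double>(pts) * tb.num / tb.den * 1000.0;
}

// Timestamp in time_base units -> whole microseconds, rounded to nearest.
// Integer math avoids floating-point error; assumes pts >= 0 and no overflow.
int64_t PtsToUs(int64_t pts, Rational tb) {
    assert(pts >= 0 && tb.num > 0 && tb.den > 0);
    const int64_t scaled = pts * tb.num * 1000000;
    return (scaled + tb.den / 2) / tb.den;
}
```

For a 90 kHz time base, a pts of 3000 is about 33.3 ms, which PtsToUs rounds to 33333 µs.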

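Video is synchronized to audio (the audio clock is the reference). The delay is additionally fine-tuned according to the difference between the actual interval between frames and the target frame interval.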

```cpp
void HWCodecPlayer::AVSync() {
    LOGCATE("HWCodecPlayer::AVSync");
    // Nominal delay: pts difference between the current and previous frame (ms)
    double delay = m_VideoClock.curPts - m_VideoClock.lastPts;
    // Target frame interval derived from the frame rate (ms)
    int tickFrame = 1000 * m_FrameRate.den / m_FrameRate.num;
    LOGCATE("HWCodecPlayer::AVSync tickFrame=%dms", tickFrame);
    if (delay <= 0 || delay > VIDEO_FRAME_MAX_DELAY) {
        delay = tickFrame; // implausible pts gap: fall back to the frame interval
    }

    double refClock = m_AudioClock.GetClock(); // video syncs to audio
    double avDiff = m_VideoClock.lastPts - refClock;
    m_VideoClock.lastPts = m_VideoClock.curPts;
    double syncThreshold = FFMAX(AV_SYNC_THRESHOLD_MIN, FFMIN(AV_SYNC_THRESHOLD_MAX, delay));
    LOGCATE("HWCodecPlayer::AVSync refClock=%lf, delay=%lf, avDiff=%lf, syncThreshold=%lf", refClock, delay, avDiff, syncThreshold);

    if (avDiff <= -syncThreshold) {
        // video is behind audio: shorten the wait
        delay = FFMAX(0, delay + avDiff);
    } else if (avDiff >= syncThreshold && delay > AV_SYNC_FRAMEDUP_THRESHOLD) {
        // video is far ahead of audio: wait out the whole difference
        delay = delay + avDiff;
    } else if (avDiff >= syncThreshold) {
        // video is ahead of audio: double the wait
        delay = 2 * delay;
    }
    LOGCATE("HWCodecPlayer::AVSync avDiff=%lf, delay=%lf", avDiff, delay);

    double tickCur = GetSysCurrentTime();
    double tickDiff = tickCur - m_VideoClock.frameTimer; // actual interval between the last two frames
    m_VideoClock.frameTimer = tickCur;
    // Fine-tune the delay by 5 ms when the actual interval drifts from the target
    if (tickDiff - tickFrame > 5) delay -= 5;
    if (tickDiff - tickFrame < -5) delay += 5;
    LOGCATE("HWCodecPlayer::AVSync delay=%lf, tickDiff=%lf", delay, tickDiff);

    if (delay > 0) {
        usleep(static_cast<useconds_t>(1000 * delay));
    }
}
```
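The decision logic in AVSync can be isolated into pure functions, which makes it easy to check by hand. The sketch below assumes all times are in milliseconds and uses hypothetical threshold values of 40 ms, 100 ms and 100 ms (ffplay's defaults of 0.04 s, 0.1 s and 0.1 s expressed in ms); the project's own AV_SYNC_* constants may differ. FrameIntervalMs, ComputeTargetDelay and FineTuneDelay are illustrative names, not part of the project.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical thresholds in milliseconds.
constexpr double kSyncThresholdMin = 40.0;
constexpr double kSyncThresholdMax = 100.0;
constexpr double kFrameDupThreshold = 100.0;

// Target frame interval in ms for a frame rate num/den (integer division,
// as in the original: 30/1 -> 33 ms).
int FrameIntervalMs(int num, int den) {
    assert(num > 0);
    return 1000 * den / num;
}

// Adjusts the nominal delay (ms) given avDiff = video clock - audio clock (ms).
double ComputeTargetDelay(double delay, double avDiff) {
    double syncThreshold = std::max(kSyncThresholdMin, std::min(kSyncThresholdMax, delay));
    if (avDiff <= -syncThreshold) {
        return std::max(0.0, delay + avDiff);  // video behind: shorten the wait
    }
    if (avDiff >= syncThreshold && delay > kFrameDupThreshold) {
        return delay + avDiff;                 // video far ahead, long frames
    }
    if (avDiff >= syncThreshold) {
        return 2 * delay;                      // video ahead: double the wait
    }
    return delay;                              // within threshold: keep pacing
}

// Nudges the delay by 5 ms when the measured frame interval drifts from target.
double FineTuneDelay(double delay, double actualIntervalMs, int targetIntervalMs) {
    double drift = actualIntervalMs - targetIntervalMs;
    if (drift > 5) delay -= 5;
    if (drift < -5) delay += 5;
    return delay;
}
```

For a 30 fps stream, FrameIntervalMs(30, 1) gives 33 ms. With a 40 ms nominal delay, video that is 60 ms behind audio gets a delay of 0 (show the next frame immediately), while video 50 ms ahead has its wait doubled to 80 ms.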

Source code:

https://github.com/githubhaohao/LearnFFmpeg
