Everything You Need to Know About Skip Connections

From the WeChat official account "AI算法与图像处理" (AI Algorithms and Image Processing).


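Contents

- Why do we need skip connections?
- What are skip connections?
- Variants of skip connections
- Implementation of skip connections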

Why Do We Need Skip Connections?

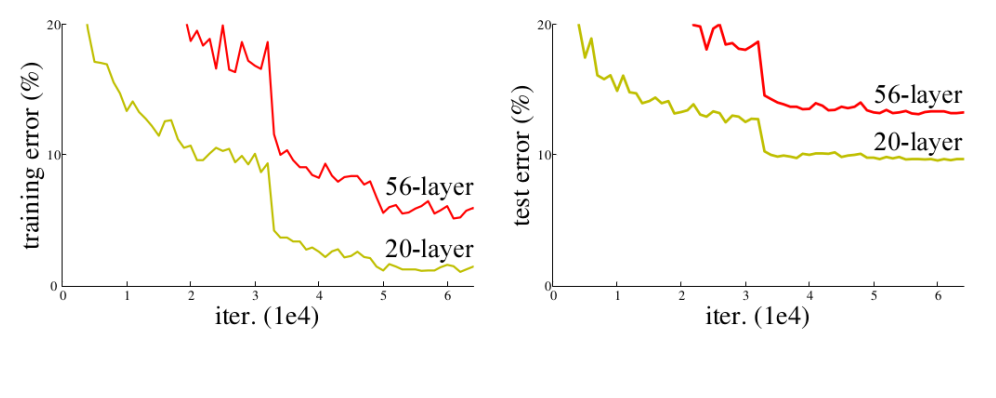

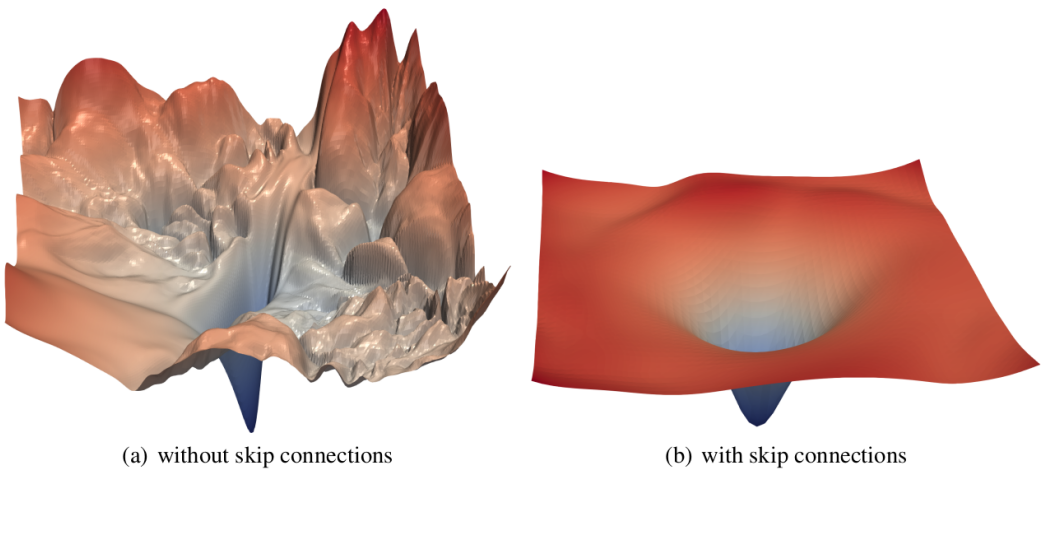

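The drop in training accuracy shows that not all systems are equally easy to optimize. As a plain network (one without skip connections) gets deeper, its training error can end up higher than that of a shallower network. This happens even though the deeper network could, in principle, copy the shallower one and turn its extra layers into identity mappings.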

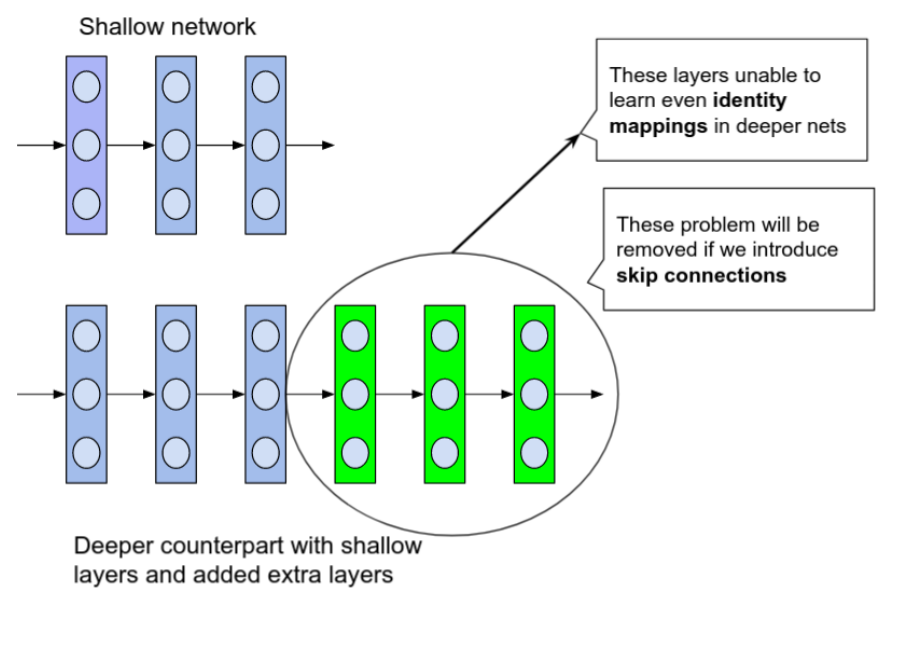

What Are Skip Connections?

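As the name suggests, skip connections (or shortcut connections) skip some of the layers in a neural network. They feed the output of one layer as input to later layers, not only to the layer immediately after it.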
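A minimal PyTorch sketch of the idea follows. A wrapper adds a sub-network's input back to its output, y = F(x) + x, so the signal can bypass F. The class name `SkipConnection` is hypothetical, not a PyTorch API. The sketch assumes F preserves the input shape. The last lines illustrate why this eases optimization: if F outputs zero, the block is exactly the identity mapping.

```python
import torch
from torch import nn


class SkipConnection(nn.Module):
    """Hypothetical wrapper: returns f(x) + x (an identity shortcut around f)."""

    def __init__(self, f: nn.Module):
        super().__init__()
        self.f = f

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # the addition requires f(x) to have the same shape as x
        return self.f(x) + x


# f: two 3x3 convolutions with padding=1, so channels and spatial size are preserved
f = nn.Sequential(
    nn.Conv2d(8, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 8, kernel_size=3, padding=1),
)
block = SkipConnection(f)

x = torch.rand(2, 8, 16, 16)
y = block(x)
print(y.shape)  # torch.Size([2, 8, 16, 16])

# if the last convolution outputs zeros, the block reduces to the identity mapping
nn.init.zeros_(f[2].weight)
nn.init.zeros_(f[2].bias)
print(torch.equal(block(x), x))  # True
```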

Variants of Skip Connections

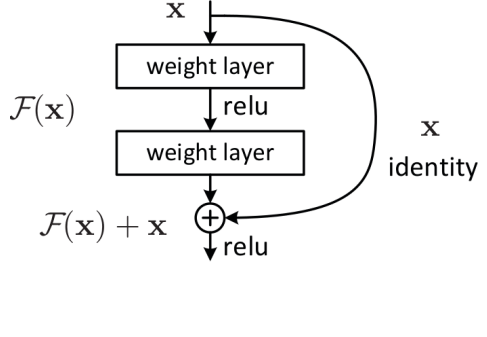

Residual Networks (ResNets)

Understanding ResNet and analyzing various models on the CIFAR-10 dataset: https://www.analyticsvidhya.com/blog/2021/06/understanding-resnet-and-analyzing-various-models-on-the-cifar-10-dataset/

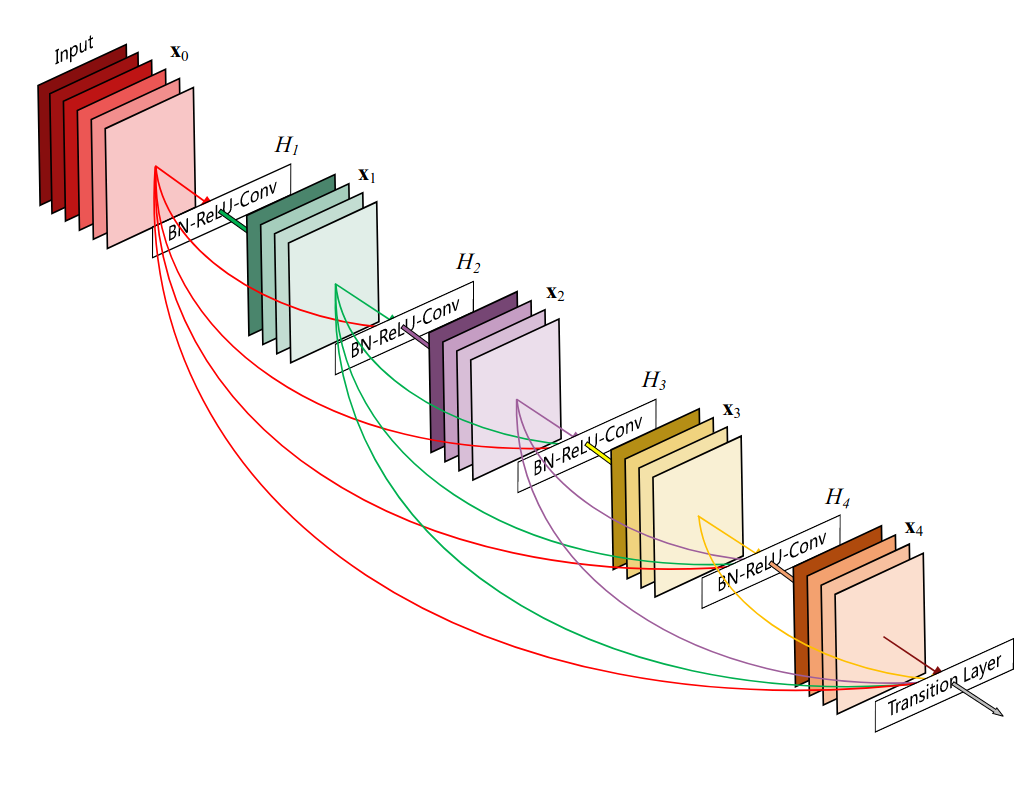

Densely Connected Convolutional Networks (DenseNets)

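When it comes to skip connections, DenseNets use concatenation whereas ResNets use summation. A ResNet block adds its input to its output element-wise. A DenseNet layer instead concatenates its output with the feature maps of all preceding layers along the channel dimension.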
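The difference shows up directly in the tensor shapes. In this small PyTorch sketch, `fx` is a random stand-in for the output of some layers F(x), chosen only for illustration. Summation needs matching shapes and keeps the channel count. Concatenation along `dim=1` (channels) makes the channel count grow.

```python
import torch

x = torch.rand(1, 64, 32, 32)   # input feature map carried by the shortcut
fx = torch.rand(1, 64, 32, 32)  # stand-in for F(x); random here just for illustration

# ResNet-style: element-wise sum, shapes must match and the channel count stays 64
res_out = fx + x
print(res_out.shape)  # torch.Size([1, 64, 32, 32])

# DenseNet-style: concatenation along the channel dimension, channels grow to 128
dense_out = torch.cat([x, fx], dim=1)
print(dense_out.shape)  # torch.Size([1, 128, 32, 32])
```

Because DenseNet keeps earlier features intact and appends new ones, later layers can read the original `x` unchanged from the first 64 channels.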

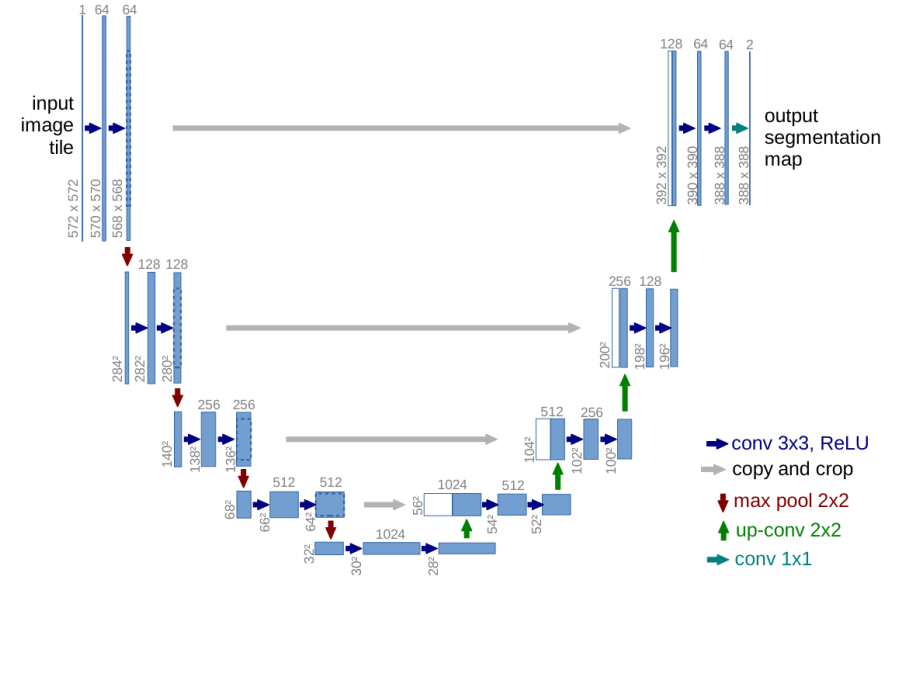

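U-Net: Convolutional Networks for Biomedical Image Segmentation. U-Net's skip connections concatenate feature maps from the contracting (encoder) path with the upsampled feature maps of the expanding (decoder) path at the same resolution.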
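A one-level U-Net-style network is sketched below to show where the skip connection sits. `TinyUNet` and `conv_block` are hypothetical names, and the channel sizes are arbitrary. The sketch also assumes padded 3 × 3 convolutions so that encoder and decoder feature maps line up without cropping. The original U-Net used unpadded convolutions and cropped the encoder features before concatenating.

```python
import torch
from torch import nn


def conv_block(in_ch, out_ch):
    # two padded 3x3 convolutions (assumption: padding=1 instead of the paper's cropping)
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.ReLU(inplace=True),
        nn.Conv2d(out_ch, out_ch, kernel_size=3, padding=1),
        nn.ReLU(inplace=True),
    )


class TinyUNet(nn.Module):
    """Hypothetical one-level U-Net, only to show the skip connection."""

    def __init__(self, in_ch=1, n_classes=2):
        super().__init__()
        self.enc = conv_block(in_ch, 16)
        self.pool = nn.MaxPool2d(2)
        self.bottleneck = conv_block(16, 32)
        self.up = nn.ConvTranspose2d(32, 16, kernel_size=2, stride=2)
        # the decoder sees 16 skipped encoder channels + 16 upsampled channels
        self.dec = conv_block(32, 16)
        self.head = nn.Conv2d(16, n_classes, kernel_size=1)

    def forward(self, x):
        e = self.enc(x)                          # encoder features, kept for the skip
        b = self.bottleneck(self.pool(e))        # coarser, deeper features
        u = self.up(b)                           # upsample back to the encoder resolution
        d = self.dec(torch.cat([e, u], dim=1))   # skip connection: concatenate channels
        return self.head(d)                      # per-pixel class scores


net = TinyUNet()
out = net(torch.rand(1, 1, 64, 64))
print(out.shape)  # torch.Size([1, 2, 64, 64])
```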

Implementation of Skip Connections

ResNet – Residual Block

```python
# import required libraries
import torch
from torch import nn
import torch.nn.functional as F
import torchvision


# basic residual block of ResNet
# It is generic in the sense that it can also be used to downsample features.
class ResidualBlock(nn.Module):
    def __init__(self, in_channels, out_channels, stride=(1, 1), downsample=None):
        """
        A basic residual block of ResNet

        Parameters
        ----------
        in_channels: Number of channels in the input
        out_channels: Number of channels in the output
        stride: strides of the two convolutional layers
        downsample: A callable applied to the shortcut before it is added to the residual mapping
        """
        super().__init__()
        self.conv1 = nn.Conv2d(
            in_channels, out_channels, kernel_size=3, stride=stride[0],
            padding=1, bias=False
        )
        # each convolution gets its own BatchNorm layer (separate parameters and running stats)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.conv2 = nn.Conv2d(
            out_channels, out_channels, kernel_size=3, stride=stride[1],
            padding=1, bias=False
        )
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.downsample = downsample

    def forward(self, x):
        residual = x
        # apply the downsample function so the shortcut matches the output shape
        if self.downsample is not None:
            residual = self.downsample(residual)
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # note: the residual is added before the final activation
        out = out + residual
        out = F.relu(out)
        return out


# downsample the shortcut using a 1 x 1 convolution
downsample = nn.Sequential(
    nn.Conv2d(64, 128, kernel_size=1, stride=2, bias=False),
    nn.BatchNorm2d(128)
)

# First layers of a ResNet34-style network (simplified: torchvision's ResNet34
# also applies BatchNorm + ReLU after the 7x7 conv and uses a 3x3 max pool)
resnet_blocks = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False),
    nn.MaxPool2d(kernel_size=2, stride=2),
    ResidualBlock(64, 64),
    ResidualBlock(64, 64),
    ResidualBlock(64, 128, stride=(2, 1), downsample=downsample)
)

# checking the shape
inputs = torch.rand(1, 3, 100, 100)  # a single 100 x 100 color image
outputs = resnet_blocks(inputs)
print(outputs.shape)  # torch.Size([1, 128, 13, 13])

# one could also use pretrained weights of ResNet trained on ImageNet
# (weights API from torchvision 0.13 on; older versions use pretrained=True)
resnet34 = torchvision.models.resnet34(weights=torchvision.models.ResNet34_Weights.DEFAULT)
```
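The printed spatial size of 13 can be checked by hand. The output size of a convolution or pooling layer along one dimension is ⌊(n + 2p − k) / s⌋ + 1. The helper `conv_out` below is a hypothetical name for that formula, assuming dilation 1 and floor rounding (PyTorch's defaults). It also shows why the stride-2 block needs `downsample`: the shortcut must shrink to the same size as the main path before the two can be added.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # output size of a Conv2d / MaxPool2d along one spatial dimension
    # (assumes dilation=1 and floor rounding, the PyTorch defaults)
    return (size + 2 * padding - kernel) // stride + 1


size = 100
size = conv_out(size, kernel=7, stride=2, padding=3)  # 7x7 conv, stride 2: 100 -> 50
size = conv_out(size, kernel=2, stride=2)              # 2x2 max pool, stride 2: 50 -> 25
# the two stride-1 residual blocks (3x3 conv, padding 1) keep the size at 25
main_path = conv_out(size, kernel=3, stride=2, padding=1)  # stride-2 block's first conv: 25 -> 13
shortcut = conv_out(size, kernel=1, stride=2)              # 1x1 downsample on the shortcut: 25 -> 13
print(main_path, shortcut)  # 13 13
```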

DenseNet – Dense Block

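The implementation proceeds in three steps (the code below covers the first two; torchvision's densenet121 provides the complete model):

- Implement a DenseNet layer
- Build a dense block
- Connect multiple dense blocks to obtain a DenseNet model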

```python
import torch
from torch import nn
import torch.nn.functional as F
import torchvision


class Dense_Layer(nn.Module):
    def __init__(self, in_channels, growthrate, bn_size):
        super().__init__()
        # bottleneck: BN-ReLU-Conv(1x1) producing bn_size * growthrate channels
        self.bn1 = nn.BatchNorm2d(in_channels)
        self.conv1 = nn.Conv2d(
            in_channels, bn_size * growthrate, kernel_size=1, bias=False
        )
        # BN-ReLU-Conv(3x3) producing growthrate new channels
        self.bn2 = nn.BatchNorm2d(bn_size * growthrate)
        self.conv2 = nn.Conv2d(
            bn_size * growthrate, growthrate, kernel_size=3, padding=1, bias=False
        )

    def forward(self, prev_features):
        # concatenate all previous feature maps along the channel dimension
        out1 = torch.cat(prev_features, dim=1)
        out1 = self.conv1(F.relu(self.bn1(out1)))
        out2 = self.conv2(F.relu(self.bn2(out1)))
        return out2


class Dense_Block(nn.Module):
    def __init__(self, n_layers, in_channels, growthrate, bn_size):
        """
        A Dense block consists of `n_layers` of `Dense_Layer`

        Parameters
        ----------
        n_layers: Number of dense layers to be stacked
        in_channels: Number of input channels for the first layer in the block
        growthrate: Growth rate (k) as mentioned in the DenseNet paper
        bn_size: Multiplicative factor for the number of bottleneck channels (bn_size * growthrate)
        """
        super().__init__()
        layers = dict()
        for i in range(n_layers):
            # layer i sees the block input plus the i * growthrate channels added so far
            layer = Dense_Layer(in_channels + i * growthrate, growthrate, bn_size)
            layers['dense{}'.format(i)] = layer
        self.block = nn.ModuleDict(layers)

    def forward(self, features):
        # accept a single tensor or a list of tensors (copied so the caller's list is not mutated)
        if isinstance(features, torch.Tensor):
            features = [features]
        else:
            features = list(features)
        for _, layer in self.block.items():
            new_features = layer(features)
            features.append(new_features)
        return torch.cat(features, dim=1)


# a block consists of initial conv layers followed by 6 dense layers
dense_block = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=7, padding=3, stride=2, bias=False),
    nn.BatchNorm2d(64),
    nn.ReLU(inplace=True),
    nn.MaxPool2d(3, 2),
    Dense_Block(6, 64, growthrate=32, bn_size=4),
)

inputs = torch.rand(1, 3, 100, 100)
outputs = dense_block(inputs)
print(outputs.shape)  # torch.Size([1, 256, 24, 24]): 64 + 6 * 32 = 256 channels

# one could also use pretrained weights of DenseNet trained on ImageNet
# (weights API from torchvision 0.13 on; older versions use pretrained=True)
densenet121 = torchvision.models.densenet121(weights=torchvision.models.DenseNet121_Weights.DEFAULT)
```
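In a dense block, each layer concatenates `growthrate` (k) new channels. A block with L layers therefore maps c input channels to c + L·k output channels, which is where the 256 = 64 + 6 × 32 channels above come from. Connecting several blocks requires a transition layer between them. In the DenseNet-BC design it halves the channel count (compression 0.5) with a 1 × 1 convolution followed by 2 × 2 average pooling.

The arithmetic sketch below traces channel counts through DenseNet-121's configuration: block sizes 6, 12, 24, 16, growth rate 32, and 64 initial channels. The helper `densenet_channels` is hypothetical.

```python
def densenet_channels(init_channels, block_config, growth_rate, compression=0.5):
    # hypothetical helper: trace channel counts through dense blocks and transition layers
    channels = init_channels
    per_block = []
    for i, n_layers in enumerate(block_config):
        channels += n_layers * growth_rate          # each dense layer adds growth_rate channels
        per_block.append(channels)
        if i != len(block_config) - 1:              # no transition layer after the last block
            channels = int(channels * compression)  # transition layer compresses the channels
    return channels, per_block


final, per_block = densenet_channels(64, (6, 12, 24, 16), growth_rate=32)
print(per_block)  # [256, 512, 1024, 1024]
print(final)      # 1024
```

The final value of 1024 matches the number of input features of the classifier in torchvision's `densenet121` (`densenet121.classifier.in_features`).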

