MapReduce源码解析之ReduceTask

ReduceTask run

与MapTask中的写法呼应的比较多,因此看过MapTask之后,对ReduceTask理解可能会更加迅速。

public void run(JobConf job, final TaskUmbilicalProtocol umbilical)

throws IOException, InterruptedException, ClassNotFoundException {

job.setBoolean(JobContext.SKIP_RECORDS, isSkipping());

if (isMapOrReduce()) {

copyPhase = getProgress().addPhase("copy");

sortPhase = getProgress().addPhase("sort");

reducePhase = getProgress().addPhase("reduce");

}

// start thread that will handle communication with parent

TaskReporter reporter = startReporter(umbilical);

boolean useNewApi = job.getUseNewReducer();

initialize(job, getJobID(), reporter, useNewApi);

// check if it is a cleanupJobTask

if (jobCleanup) {

runJobCleanupTask(umbilical, reporter);

return;

}

if (jobSetup) {

runJobSetupTask(umbilical, reporter);

return;

}

if (taskCleanup) {

runTaskCleanupTask(umbilical, reporter);

return;

}

// Initialize the codec

codec = initCodec();

RawKeyValueIterator rIter = null;

ShuffleConsumerPlugin shuffleConsumerPlugin = null;

Class combinerClass = conf.getCombinerClass();

CombineOutputCollector combineCollector =

(null != combinerClass) ?

new CombineOutputCollector(reduceCombineOutputCounter, reporter, conf) : null;

Class extends ShuffleConsumerPlugin> clazz =

job.getClass(MRConfig.SHUFFLE_CONSUMER_PLUGIN, Shuffle.class, ShuffleConsumerPlugin.class);

shuffleConsumerPlugin = ReflectionUtils.newInstance(clazz, job);

LOG.info("Using ShuffleConsumerPlugin: " + shuffleConsumerPlugin);

ShuffleConsumerPlugin.Context shuffleContext =

new ShuffleConsumerPlugin.Context(getTaskID(), job, FileSystem.getLocal(job), umbilical,

super.lDirAlloc, reporter, codec,

combinerClass, combineCollector,

spilledRecordsCounter, reduceCombineInputCounter,

shuffledMapsCounter,

reduceShuffleBytes, failedShuffleCounter,

mergedMapOutputsCounter,

taskStatus, copyPhase, sortPhase, this,

mapOutputFile, localMapFiles);

shuffleConsumerPlugin.init(shuffleContext);

rIter = shuffleConsumerPlugin.run();

// free up the data structures

mapOutputFilesOnDisk.clear();

sortPhase.complete(); // sort is complete

setPhase(TaskStatus.Phase.REDUCE);

statusUpdate(umbilical);

Class keyClass = job.getMapOutputKeyClass();

Class valueClass = job.getMapOutputValueClass();

RawComparator comparator = job.getOutputValueGroupingComparator();

if (useNewApi) {

runNewReducer(job, umbilical, reporter, rIter, comparator,

keyClass, valueClass);

} else {

runOldReducer(job, umbilical, reporter, rIter, comparator,

keyClass, valueClass);

}

shuffleConsumerPlugin.close();

done(umbilical, reporter);

}shuffle洗牌

reduce过程第一步,就是洗牌,相同的key被拉取到一个分区,而且始从不同计算节点分布式拉取。

迭代器

洗牌之后或许到一个迭代器,为何要使用迭代器模式,因为大数据量的计算,可能数据会超越我们的内存空间。所以我们不可能将数据加载到内存中进行遍历处理,而是需要在内存中放置一个迭代器,然后去逐行读取我们磁盘上的文件。

rIter = shuffleConsumerPlugin.run();

排序

reduce中的排序是归并排序,此时需要我们有点排序基础常识。因为之前的map已经帮助我们把每个小文件排好了顺序,只是每个小文件是乱放的,但是他们的分区对应着自己的reduce。然后我们将小文件进行归并排序,就会加快排序的速度。这个是归并排序的特点。

分组比较器

与map中的排序比较器规则不同(返回 -1,0,1 分别代表 小于,等于,大于)

reduce中采用分组比较器,让需求的key排序到一块(返回 是不是一组,即 true or false)。

此处的比较器我们也可以自定义,也可以用系统默认比较器。最终会有多种map排序比较器和reduce分组比较器的组合。默认情况下,如果我们都不指定,则都会选择key自身的排序比较器。

public RawComparator getOutputValueGroupingComparator() {

Class extends RawComparator> theClass = getClass(

JobContext.GROUP_COMPARATOR_CLASS, null, RawComparator.class);

if (theClass == null) {

return getOutputKeyComparator();

}

return ReflectionUtils.newInstance(theClass, this);

}Reduce的Run

private <INKEY,INVALUE,OUTKEY,OUTVALUE>

void runNewReducer(JobConf job,

final TaskUmbilicalProtocol umbilical,

final TaskReporter reporter,

RawKeyValueIterator rIter,

RawComparator<INKEY> comparator,

Class<INKEY> keyClass,

Class<INVALUE> valueClass

) throws IOException,InterruptedException,

ClassNotFoundException {

// wrap value iterator to report progress.

final RawKeyValueIterator rawIter = rIter;

rIter = new RawKeyValueIterator() {

public void close() throws IOException {

rawIter.close();

}

public DataInputBuffer getKey() throws IOException {

return rawIter.getKey();

}

public Progress getProgress() {

return rawIter.getProgress();

}

public DataInputBuffer getValue() throws IOException {

return rawIter.getValue();

}

public boolean next() throws IOException {

boolean ret = rawIter.next();

reporter.setProgress(rawIter.getProgress().getProgress());

return ret;

}

};

// make a task context so we can get the classes

org.apache.hadoop.mapreduce.TaskAttemptContext taskContext =

new org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl(job,

getTaskID(), reporter);

// make a reducer

org.apache.hadoop.mapreduce.Reducer<INKEY,INVALUE,OUTKEY,OUTVALUE> reducer =

(org.apache.hadoop.mapreduce.Reducer<INKEY,INVALUE,OUTKEY,OUTVALUE>)

ReflectionUtils.newInstance(taskContext.getReducerClass(), job);

org.apache.hadoop.mapreduce.RecordWriter<OUTKEY,OUTVALUE> trackedRW =

new NewTrackingRecordWriter<OUTKEY, OUTVALUE>(this, taskContext);

job.setBoolean("mapred.skip.on", isSkipping());

job.setBoolean(JobContext.SKIP_RECORDS, isSkipping());

org.apache.hadoop.mapreduce.Reducer.Context

reducerContext = createReduceContext(reducer, job, getTaskID(),

rIter, reduceInputKeyCounter,

reduceInputValueCounter,

trackedRW,

committer,

reporter, comparator, keyClass,

valueClass);

try {

reducer.run(reducerContext);

} finally {

trackedRW.close(reducerContext);

}

}创建上下文

protected static <INKEY,INVALUE,OUTKEY,OUTVALUE>

org.apache.hadoop.mapreduce.Reducer<INKEY,INVALUE,OUTKEY,OUTVALUE>.Context

createReduceContext(org.apache.hadoop.mapreduce.Reducer

<INKEY,INVALUE,OUTKEY,OUTVALUE> reducer,

Configuration job,

org.apache.hadoop.mapreduce.TaskAttemptID taskId,

RawKeyValueIterator rIter,

org.apache.hadoop.mapreduce.Counter inputKeyCounter,

org.apache.hadoop.mapreduce.Counter inputValueCounter,

org.apache.hadoop.mapreduce.RecordWriter<OUTKEY,OUTVALUE> output,

org.apache.hadoop.mapreduce.OutputCommitter committer,

org.apache.hadoop.mapreduce.StatusReporter reporter,

RawComparator<INKEY> comparator,

Class<INKEY> keyClass, Class<INVALUE> valueClass

) throws IOException, InterruptedException {

org.apache.hadoop.mapreduce.ReduceContext<INKEY, INVALUE, OUTKEY, OUTVALUE>

reduceContext =

new ReduceContextImpl<INKEY, INVALUE, OUTKEY, OUTVALUE>(job, taskId,

rIter,

inputKeyCounter,

inputValueCounter,

output,

committer,

reporter,

comparator,

keyClass,

valueClass);

org.apache.hadoop.mapreduce.Reducer<INKEY,INVALUE,OUTKEY,OUTVALUE>.Context

reducerContext =

new WrappedReducer<INKEY, INVALUE, OUTKEY, OUTVALUE>().getReducerContext(

reduceContext);

return reducerContext;

}迭代器赋值input

将迭代器作为输入赋值给对象的input属性。

public ReduceContextImpl(Configuration conf, TaskAttemptID taskid,

RawKeyValueIterator input,

Counter inputKeyCounter,

Counter inputValueCounter,

RecordWriter<KEYOUT,VALUEOUT> output,

OutputCommitter committer,

StatusReporter reporter,

RawComparator<KEYIN> comparator,

Class<KEYIN> keyClass,

Class<VALUEIN> valueClass

) throws InterruptedException, IOException{

super(conf, taskid, output, committer, reporter);

this.input = input;

this.inputKeyCounter = inputKeyCounter;

this.inputValueCounter = inputValueCounter;

this.comparator = comparator;

this.serializationFactory = new SerializationFactory(conf);

this.keyDeserializer = serializationFactory.getDeserializer(keyClass);

this.keyDeserializer.open(buffer);

this.valueDeserializer = serializationFactory.getDeserializer(valueClass);

this.valueDeserializer.open(buffer);

hasMore = input.next();

this.keyClass = keyClass;

this.valueClass = valueClass;

this.conf = conf;

this.taskid = taskid;

}调用ReduceRun

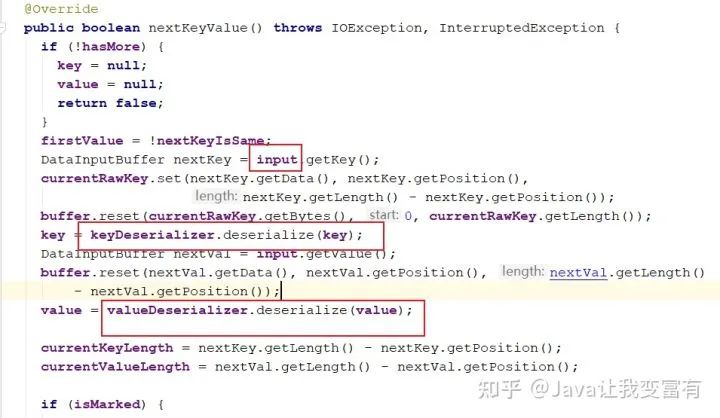

根据map的经验,Reduce中的run方法,netKey一定使用的我们传入的迭代器实例方法。同时还会进行反序列化读出。并且接着会多取一条记录,判断下一条是否是一组。

/**

* Advance to the next key/value pair.

*/

@Override

public boolean nextKeyValue() throws IOException, InterruptedException {

if (!hasMore) {

key = null;

value = null;

return false;

}

firstValue = !nextKeyIsSame;

DataInputBuffer nextKey = input.getKey();

currentRawKey.set(nextKey.getData(), nextKey.getPosition(),

nextKey.getLength() - nextKey.getPosition());

buffer.reset(currentRawKey.getBytes(), 0, currentRawKey.getLength());

key = keyDeserializer.deserialize(key);

DataInputBuffer nextVal = input.getValue();

buffer.reset(nextVal.getData(), nextVal.getPosition(), nextVal.getLength()

- nextVal.getPosition());

value = valueDeserializer.deserialize(value);

currentKeyLength = nextKey.getLength() - nextKey.getPosition();

currentValueLength = nextVal.getLength() - nextVal.getPosition();

if (isMarked) {

backupStore.write(nextKey, nextVal);

}

hasMore = input.next();

if (hasMore) {

nextKey = input.getKey();

nextKeyIsSame = comparator.compare(currentRawKey.getBytes(), 0,

currentRawKey.getLength(),

nextKey.getData(),

nextKey.getPosition(),

nextKey.getLength() - nextKey.getPosition()

) == 0;

} else {

nextKeyIsSame = false;

}

inputValueCounter.increment(1);

return true;

}嵌套迭代器

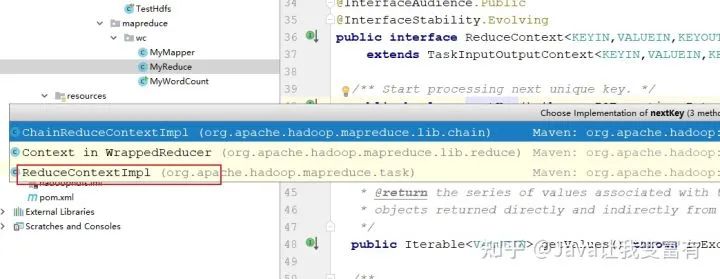

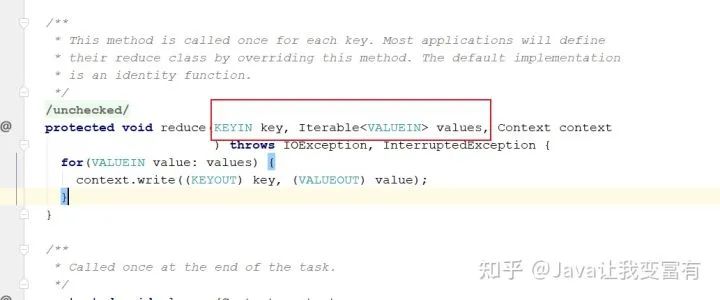

我们在run方法中发现,传入的key是我们当前迭代的key,而传入的value则又是一个迭代器。因为reduce是按照一组来计算的,不像是map按照一个记录一个记录来读写。所以如果一组的key value数据量过大,reduce数量过多,那么内存肯定很容易就崩溃了。所以我们此处还是采用了另一个迭代器,来专门针对一组进行迭代。同时利用多读一条的方式,来判断当前迭代器是否还能够继续向下遍历。

public void run(Context context) throws IOException, InterruptedException {

setup(context);

try {

while (context.nextKey()) {

reduce(context.getCurrentKey(), context.getValues(), context);

// If a back up store is used, reset it

Iterator<VALUEIN> iter = context.getValues().iterator();

if(iter instanceof ReduceContext.ValueIterator) {

((ReduceContext.ValueIterator<VALUEIN>)iter).resetBackupStore();

}

}

} finally {

cleanup(context);

}

}

hasMore = input.next();

if (hasMore) {

nextKey = input.getKey();

nextKeyIsSame = comparator.compare(currentRawKey.getBytes(), 0,

currentRawKey.getLength(),

nextKey.getData(),

nextKey.getPosition(),

nextKey.getLength() - nextKey.getPosition()

) == 0;

} else {

nextKeyIsSame = false;

}

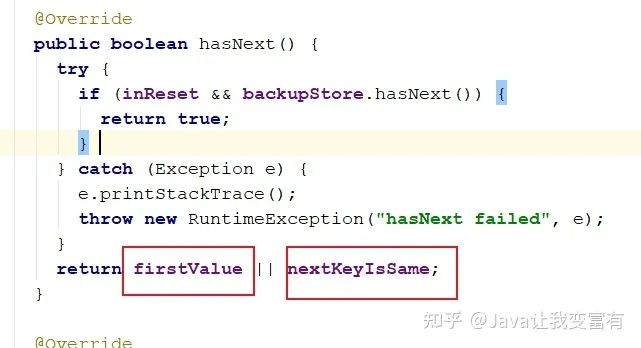

public boolean hasNext() {

try {

if (inReset && backupStore.hasNext()) {

return true;

}

} catch (Exception e) {

e.printStackTrace();

throw new RuntimeException("hasNext failed", e);

}

return firstValue || nextKeyIsSame;

}