One Article to Understand | How the Linux Kernel Reverse Mapping Mechanism Works

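When the kernel needs to reclaim an allocated page, it must first tear down the mappings of that page. To do so it has to know which process virtual address spaces the physical page is mapped into, and this is why the reverse mapping (rmap) mechanism exists. Reverse mapping is generally divided into anonymous-page mapping and file-page mapping; this article covers anonymous-page reverse mapping first.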

Basic data structures

Members of struct page related to reverse mapping

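struct page {
    ...
    struct address_space *mapping;  /* If low bit clear, points to
                                     * inode address_space, or NULL.
                                     * If page mapped as anonymous
                                     * memory, low bit is set, and
                                     * it points to anon_vma object:
                                     * see PAGE_MAPPING_ANON below.
                                     */
    ...
    /* page_deferred_list().next -- second tail page */
    };
    /* Second double word */
    union {
        pgoff_t index;      /* Our offset within mapping. */
        ...
    union {
        atomic_t _mapcount;
        ...
};

mapping

The structures that mapping points to are at least 4-byte aligned, so the low two bits of the pointer are always zero and can be used to distinguish different kinds of mapping. For an anonymous mapping the lowest bit is set (PAGE_MAPPING_ANON) and the pointer refers to an anon_vma structure; each anonymous page corresponds to exactly one anon_vma. For a file mapping the pointer refers to an address_space structure.

index

The page offset. For an anonymous mapping, index is the page offset of the page within the virtual memory area described by vm_area_struct; for a file mapping, index is the page offset within the file of the data held in the physical page.

_mapcount

Records how many vm_area_struct virtual memory areas the page is mapped into (the stored value starts at -1, so 0 means one mapping). Do not confuse it with map_count in mm_struct, which is the number of vm_area_struct areas in that mm_struct.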
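The low-bit trick on mapping can be sketched in userspace C. This is only an illustration under stated assumptions: page_model and the helper names are hypothetical stand-ins rather than kernel APIs, and only the PAGE_MAPPING_ANON bit is modeled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Userspace stand-ins for the kernel types (hypothetical, fields omitted). */
struct anon_vma { int dummy; };
struct address_space { int dummy; };

#define PAGE_MAPPING_ANON 0x1UL /* low bit set: mapping refers to an anon_vma */

struct page_model {
    uintptr_t mapping;          /* tagged pointer, like page->mapping */
};

/* Store an anon_vma pointer with the low bit set. */
static void set_anon_mapping(struct page_model *page, struct anon_vma *av)
{
    page->mapping = (uintptr_t)av | PAGE_MAPPING_ANON;
}

/* Store an address_space pointer; the low bit stays clear. */
static void set_file_mapping(struct page_model *page, struct address_space *as)
{
    page->mapping = (uintptr_t)as;
}

/* Non-zero if the page is tagged as anonymous. */
static int page_is_anon(const struct page_model *page)
{
    return (page->mapping & PAGE_MAPPING_ANON) != 0;
}

/* Strip the tag bit to recover the anon_vma; NULL for a file page. */
static struct anon_vma *page_anon_vma_model(const struct page_model *page)
{
    if (!page_is_anon(page))
        return NULL;
    return (struct anon_vma *)(page->mapping & ~PAGE_MAPPING_ANON);
}
```

The tag is safe only because both structures are aligned so that bit 0 of a valid pointer is always zero; the reader of mapping must mask the bit off before dereferencing.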

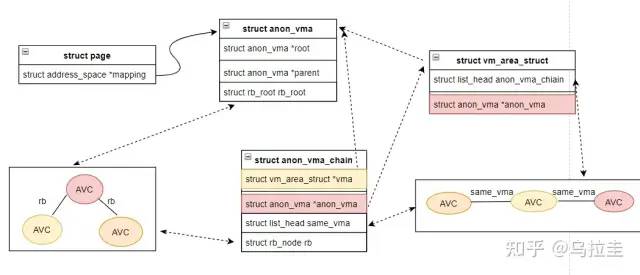

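struct anon_vma is usually abbreviated AV, struct anon_vma_chain AVC, and struct vm_area_struct VMA. The path from a page to its VMAs is generally page->AV->AVC->VMA, with the AVC acting as a bridge. The AVC exists mainly for lookup efficiency when a parent process and many child processes share the same pages; compare the implementation in the 2.6 kernels for details.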

struct anon_vma

struct anon_vma {
    struct anon_vma *root;      /* Root of this anon_vma tree */
    struct rw_semaphore rwsem;  /* W: modification, R: walking the list */
    /*
     * The refcount is taken on an anon_vma when there is no
     * guarantee that the vma of page tables will exist for
     * the duration of the operation. A caller that takes
     * the reference is responsible for clearing up the
     * anon_vma if they are the last user on release
     */
    atomic_t refcount;
    /*
     * Count of child anon_vmas and VMAs which points to this anon_vma.
     *
     * This counter is used for making decision about reusing anon_vma
     * instead of forking new one. See comments in function anon_vma_clone.
     */
    unsigned degree;
    struct anon_vma *parent;    /* Parent of this anon_vma */
    /*
     * NOTE: the LSB of the rb_root.rb_node is set by
     * mm_take_all_locks() _after_ taking the above lock. So the
     * rb_root must only be read/written after taking the above lock
     * to be sure to see a valid next pointer. The LSB bit itself
     * is serialized by a system wide lock only visible to
     * mm_take_all_locks() (mm_all_locks_mutex).
     */
    struct rb_root rb_root;     /* Interval tree of private "related" vmas */
};

struct anon_vma_chain

struct anon_vma_chain {
    struct vm_area_struct *vma;
    struct anon_vma *anon_vma;
    struct list_head same_vma;  /* locked by mmap_sem & page_table_lock */
    struct rb_node rb;          /* locked by anon_vma->rwsem */
    unsigned long rb_subtree_last;
#ifdef CONFIG_DEBUG_VM_RB
    unsigned long cached_vma_start, cached_vma_last;
#endif
};

Relevant members of struct vm_area_struct

struct vm_area_struct {
    ...
    struct list_head anon_vma_chain; /* Serialized by mmap_sem &
                                      * page_table_lock */
    struct anon_vma *anon_vma;       /* Serialized by page_table_lock */
    ...
};

The relationships among these structures are roughly as follows:

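A page finds its AV through mapping; the AV walks the red-black tree rb_root that it manages and visits every AVC node on the tree; each AVC then finds the corresponding VMA through its vma member pointer. This completes the lookup of the page's mappings. A few points to note:

1. The VMA also has a list, anon_vma_chain, that manages its AVCs. It is mainly used for bookkeeping between parent and child processes, as described in detail below.

2. The VMA has an anon_vma pointer member, and the AVC has one too. The AVC is the bridge, so it naturally points to both the VMA and the AV; why, then, does the VMA also need to point to the AV? When a process is created, an AV is normally created first, then the AVC and the VMA, and anon_vma_chain_link is called to tie the three together. But a VMA may not have any pages yet; when a page fault is then triggered, vma->anon_vma allows a quick check of whether the VMA already has pages (that is, whether an AV has been set up for it).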
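The relationships above can be modeled in a few lines of userspace C. This is a rough sketch, not kernel code: all *_model types and function names are hypothetical, the page's mapping is left untagged, and a singly linked list stands in for the kernel's interval tree (rb_root) to keep the example short.

```c
#include <assert.h>
#include <stddef.h>

struct vma_model;
struct av_model;

/* One AVC ties exactly one VMA to exactly one AV. */
struct avc_model {
    struct vma_model *vma;         /* the VMA this link belongs to */
    struct av_model *anon_vma;     /* the AV this link hangs on */
    struct avc_model *next_in_av;  /* stands in for the rb node */
    struct avc_model *next_in_vma; /* stands in for same_vma */
};

struct av_model {
    struct avc_model *avcs;        /* every AVC linked to this AV */
};

struct vma_model {
    unsigned long vm_start, vm_end;
    struct av_model *anon_vma;     /* quick check: has an AV been set up? */
    struct avc_model *anon_vma_chain;
};

struct page_model {
    struct av_model *mapping;      /* untagged here for simplicity */
};

/* Mirrors the role of anon_vma_chain_link: push the AVC onto both lists. */
static void link_model(struct vma_model *vma, struct avc_model *avc,
                       struct av_model *av)
{
    avc->vma = vma;
    avc->anon_vma = av;
    avc->next_in_vma = vma->anon_vma_chain;
    vma->anon_vma_chain = avc;
    avc->next_in_av = av->avcs;
    av->avcs = avc;
}

/* page -> AV -> each AVC -> VMA; stores up to max VMAs, returns the count. */
static int collect_vmas(const struct page_model *page,
                        struct vma_model **out, int max)
{
    int n = 0;

    for (struct avc_model *avc = page->mapping->avcs; avc && n < max;
         avc = avc->next_in_av)
        out[n++] = avc->vma;
    return n;
}
```

Linking two VMAs to the same AV and then calling collect_vmas on a page that points to that AV returns both VMAs, which is the whole purpose of the AV->AVC->VMA chain.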

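Common interfaces

anon_vma_chain_link

1. Point the vma and anon_vma members of the AVC at the VMA and the AV respectively;

2. Add the AVC to the VMA's anon_vma_chain list;

3. Add the AVC to the AV's rb_root red-black tree; all AVCs are normally found by walking this tree.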

static void anon_vma_chain_link(struct vm_area_struct *vma,
                                struct anon_vma_chain *avc,
                                struct anon_vma *anon_vma)
{
    avc->vma = vma;
    avc->anon_vma = anon_vma;
    list_add(&avc->same_vma, &vma->anon_vma_chain);
    anon_vma_interval_tree_insert(avc, &anon_vma->rb_root);
}

Code walkthrough

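Reverse mapping is closely tied to copy-on-write between parent and child processes, so we start with how the AV, AVC and VMA are created when parent and child processes are set up.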

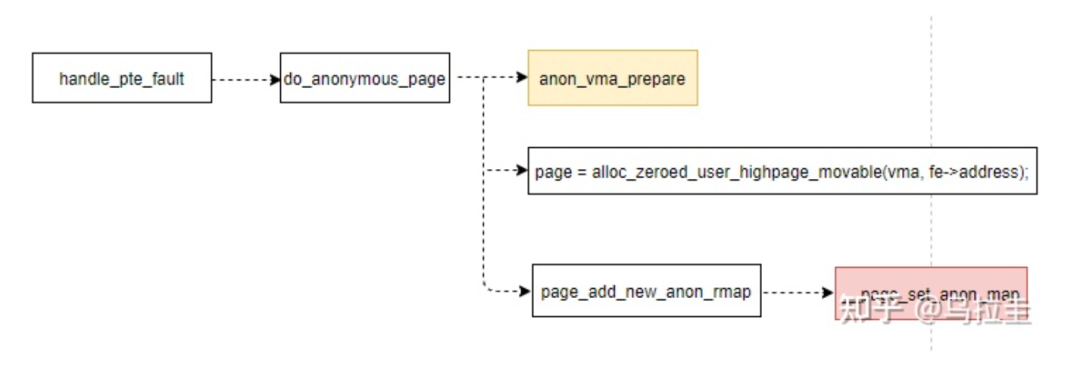

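1. The parent process creates an anonymous page

When a page fault is triggered, execution reaches handle_pte_fault. anon_vma_prepare is responsible for creating the AVC and AV and linking them to each other; the actual association between the newly created page and the AV is made in __page_set_anon_rmap. At that point the reverse mapping for the parent's new page is complete.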

int anon_vma_prepare(struct vm_area_struct *vma)
{
    struct anon_vma *anon_vma = vma->anon_vma;
    struct anon_vma_chain *avc;
    ...
    if (unlikely(!anon_vma)) {
        struct mm_struct *mm = vma->vm_mm;
        struct anon_vma *allocated;
        avc = anon_vma_chain_alloc(GFP_KERNEL);
        ...
        anon_vma = find_mergeable_anon_vma(vma);
        allocated = NULL;
        if (!anon_vma) {
            anon_vma = anon_vma_alloc();
            ...
            allocated = anon_vma;
        }
        anon_vma_lock_write(anon_vma);
        /* page_table_lock to protect against threads */
        spin_lock(&mm->page_table_lock);
        if (likely(!vma->anon_vma)) {
            vma->anon_vma = anon_vma;
            anon_vma_chain_link(vma, avc, anon_vma);
            /* vma reference or self-parent link for new root */
            anon_vma->degree++;
            allocated = NULL;
            avc = NULL;
        }
        spin_unlock(&mm->page_table_lock);
        anon_vma_unlock_write(anon_vma);
        ...
    }
    return 0;
    ...
}

static void __page_set_anon_rmap(struct page *page,
    struct vm_area_struct *vma, unsigned long address, int exclusive)
{
    struct anon_vma *anon_vma = vma->anon_vma;
    ...
    anon_vma = (void *) anon_vma + PAGE_MAPPING_ANON;
    page->mapping = (struct address_space *) anon_vma;
    page->index = linear_page_index(vma, address);
}

As for the meaning of index, the implementation of linear_page_index should make it clear.

static inline pgoff_t linear_page_index(struct vm_area_struct *vma,
                                        unsigned long address)
{
    pgoff_t pgoff;
    if (unlikely(is_vm_hugetlb_page(vma)))
        return linear_hugepage_index(vma, address);
    pgoff = (address - vma->vm_start) >> PAGE_SHIFT;
    pgoff += vma->vm_pgoff;
    return pgoff;
}

2. The parent process creates a child process

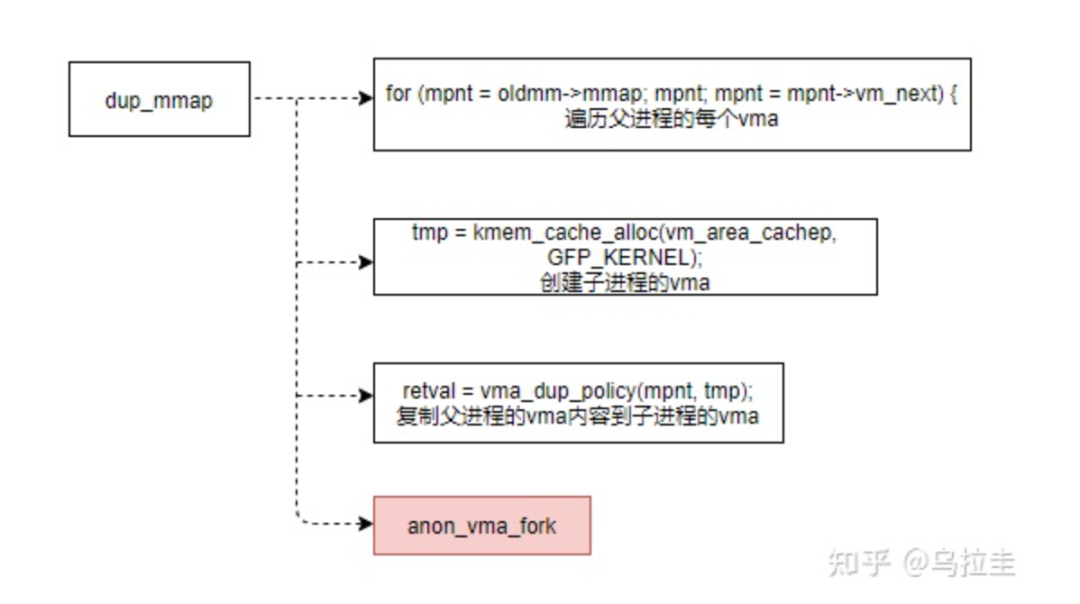

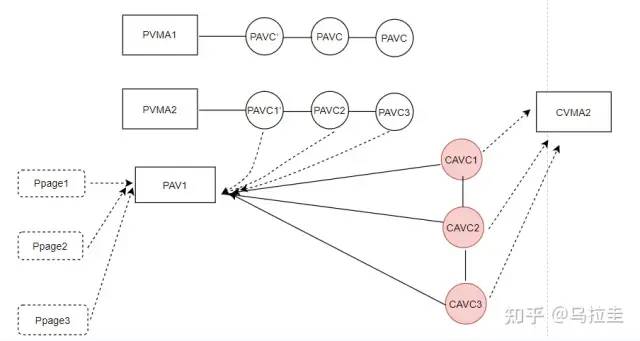

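When a parent process creates a child, the child copies the parent's VMAs as its own address space, and parent and child share the same pages until the child writes to its address space. This is what is known as COW (copy-on-write). Two things have to happen in this case: 1. the child inherits the parent's AVC, AV and VMA and the relationships among them; 2. the child creates its own AV, AVC and VMA.

This flow is implemented in dup_mm->dup_mmap->anon_vma_fork.

dup_mmap creates the child's VMAs one by one and copies the information of the corresponding parent VMA into each.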

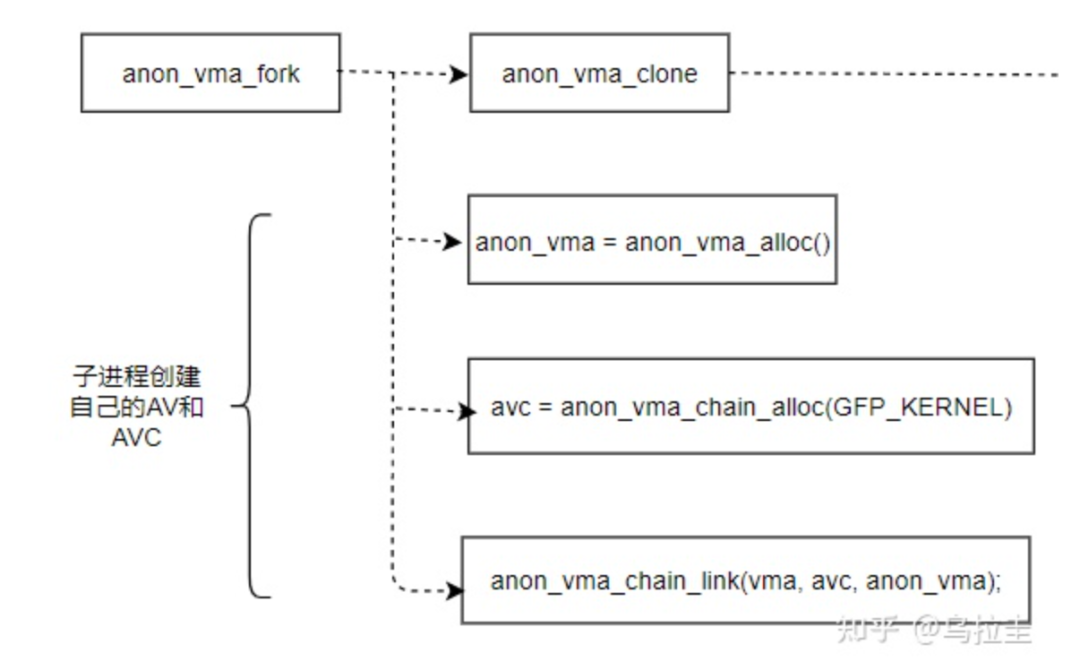

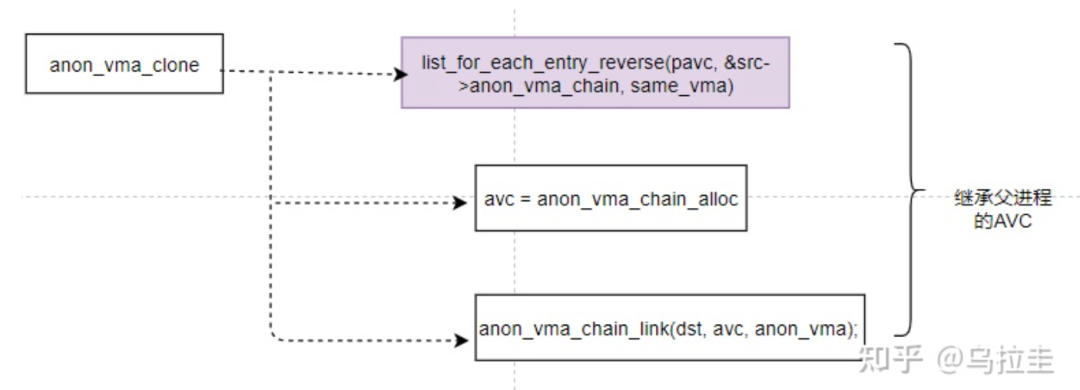

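anon_vma_clone allocates a new AVC and links the child's VMA to the parent's AV, so the rb tree of the parent's AV now contains the child's AVC, and walking that tree reaches the child's VMA. A VMA can contain many pages, but all pages in the area need only one AV for reverse mapping.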
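What fork sets up can be sketched with a small model. The sketch below is an assumption-laden simplification, not the kernel's implementation: the types and functions are hypothetical, fixed-size arrays replace the AVC list and rb tree, and the degree-based reuse of an existing AV is ignored.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_LINKS 8 /* arbitrary bound chosen for this sketch */

struct vma_model;

/* An AV records every VMA reachable from it (the kernel uses AVCs in a tree). */
struct av_model {
    struct vma_model *vmas[MAX_LINKS];
    int nr_vmas;
};

struct vma_model {
    struct av_model *anon_vma;          /* the VMA's own AV, used for new pages */
    struct av_model *linked[MAX_LINKS]; /* every AV this VMA is chained to */
    int nr_linked;
};

/* Link a VMA and an AV in both directions; returns 0, or -1 if full. */
static int link_model(struct vma_model *vma, struct av_model *av)
{
    if (av->nr_vmas == MAX_LINKS || vma->nr_linked == MAX_LINKS)
        return -1;
    av->vmas[av->nr_vmas++] = vma;
    vma->linked[vma->nr_linked++] = av;
    return 0;
}

/*
 * Model of the fork path: first chain the child VMA to every AV the parent
 * VMA is chained to (the anon_vma_clone step), then give the child its own AV.
 */
static int fork_model(struct vma_model *child, const struct vma_model *parent,
                      struct av_model *child_av)
{
    for (int i = 0; i < parent->nr_linked; i++)
        if (link_model(child, parent->linked[i]))
            return -1;
    child->anon_vma = child_av;
    return link_model(child, child_av);
}
```

After fork_model, the parent's AV lists both the parent and the child VMA, so a page created by the parent before the fork can be traced to both processes, while the child's own AV lists only the child VMA.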

The anon_vma_clone code is as follows:

int anon_vma_clone(struct vm_area_struct *dst, struct vm_area_struct *src)
{
    struct anon_vma_chain *avc, *pavc;
    struct anon_vma *root = NULL;
    list_for_each_entry_reverse(pavc, &src->anon_vma_chain, same_vma) {
        struct anon_vma *anon_vma;
        avc = anon_vma_chain_alloc(GFP_NOWAIT | __GFP_NOWARN);
        if (unlikely(!avc)) {
            unlock_anon_vma_root(root);
            root = NULL;
            avc = anon_vma_chain_alloc(GFP_KERNEL);
            if (!avc)
                goto enomem_failure;
        }
        anon_vma = pavc->anon_vma;
        root = lock_anon_vma_root(root, anon_vma);
        anon_vma_chain_link(dst, avc, anon_vma);
        /*
         * Reuse existing anon_vma if its degree lower than two,
         * that means it has no vma and only one anon_vma child.
         *
         * Do not chose parent anon_vma, otherwise first child
         * will always reuse it. Root anon_vma is never reused:
         * it has self-parent reference and at least one child.
         */
        if (!dst->anon_vma && anon_vma != src->anon_vma &&
                anon_vma->degree < 2)
            dst->anon_vma = anon_vma;
    }
    if (dst->anon_vma)
        dst->anon_vma->degree++;
    unlock_anon_vma_root(root);
    return 0;
    ...
}

3. The child process triggers COW and creates its own anonymous page

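When the newly created child process writes data, a page fault is triggered and wp_page_copy creates a new page. The new page is attached to the AV and AVC that manage the child's own VMA.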

if (fe->flags & FAULT_FLAG_WRITE) {
    if (!pte_write(entry))
        return do_wp_page(fe, entry);
    entry = pte_mkdirty(entry);
}

4. Page reclaim: removing the mappings

When a physical page is reclaimed, try_to_unmap is called to remove the page-table mappings of the page. For an anonymous page, the flow is try_to_unmap->rmap_walk->rmap_walk_anon.
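During this walk, the page's index has to be turned back into a virtual address inside each VMA that is visited. The sketch below shows that round trip in userspace C; vma_model, index_of and address_of are hypothetical names, 4 KiB pages are assumed, and the huge-page case is left out.

```c
#include <assert.h>

#define PAGE_SHIFT 12 /* assumption: 4 KiB pages */

struct vma_model {
    unsigned long vm_start; /* first virtual address of the area */
    unsigned long vm_end;   /* first address past the area */
    unsigned long vm_pgoff; /* offset of vm_start, in pages */
};

/* Forward direction, same arithmetic as linear_page_index. */
static unsigned long index_of(const struct vma_model *vma, unsigned long address)
{
    return ((address - vma->vm_start) >> PAGE_SHIFT) + vma->vm_pgoff;
}

/* Reverse direction needed by the walk: page index -> address in this VMA. */
static unsigned long address_of(const struct vma_model *vma, unsigned long index)
{
    return vma->vm_start + ((index - vma->vm_pgoff) << PAGE_SHIFT);
}
```

Because the address is recomputed per VMA from index and vm_pgoff, the same page can be located in several VMAs even when they start at different virtual addresses.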

int rmap_walk(struct page *page, struct rmap_walk_control *rwc)
{
    if (unlikely(PageKsm(page)))
        return rmap_walk_ksm(page, rwc);
    else if (PageAnon(page))
        return rmap_walk_anon(page, rwc, false);
    else
        return rmap_walk_file(page, rwc, false);
}

static int rmap_walk_anon(struct page *page, struct rmap_walk_control *rwc,
                          bool locked)
{
    struct anon_vma *anon_vma;
    pgoff_t pgoff;
    struct anon_vma_chain *avc;
    int ret = SWAP_AGAIN;
    if (locked) {
        anon_vma = page_anon_vma(page);
        /* anon_vma disappear under us? */
        VM_BUG_ON_PAGE(!anon_vma, page);
    } else {
        anon_vma = rmap_walk_anon_lock(page, rwc);
    }
    ...
    pgoff = page_to_pgoff(page);
    // Walk the AV's red-black tree to find every AVC
    anon_vma_interval_tree_foreach(avc, &anon_vma->rb_root, pgoff, pgoff) {
        // Find the VMA through the AVC
        struct vm_area_struct *vma = avc->vma;
        // address is the virtual address of the page in this VMA
        unsigned long address = vma_address(page, vma);
        ...
        ret = rwc->rmap_one(page, vma, address, rwc->arg);
        ...
    }
    ...
}

rmap_one points to try_to_unmap_one. That function is fairly complex, so only the part that clears the page table entry is excerpted here.

static int try_to_unmap_one(struct page *page, struct vm_area_struct *vma,
                            unsigned long address, void *arg)
{
    struct mm_struct *mm = vma->vm_mm;
    pte_t *pte;
    pte_t pteval;
    spinlock_t *ptl;
    int ret = SWAP_AGAIN;
    struct rmap_private *rp = arg;
    enum ttu_flags flags = rp->flags;
    pte = page_check_address(page, mm, address, &ptl,
                             PageTransCompound(page));
    ...
    /* Nuke the page table entry. */
    flush_cache_page(vma, address, page_to_pfn(page));
    if (should_defer_flush(mm, flags)) {
        /*
         * We clear the PTE but do not flush so potentially a remote
         * CPU could still be writing to the page. If the entry was
         * previously clean then the architecture must guarantee that
         * a clear->dirty transition on a cached TLB entry is written
         * through and traps if the PTE is unmapped.
         */
        pteval = ptep_get_and_clear(mm, address, pte);
        set_tlb_ubc_flush_pending(mm, page, pte_dirty(pteval));
    } else {
        pteval = ptep_clear_flush(vma, address, pte);
    }
    ...
}

References

Understanding the Linux Kernel (《深入理解linux内核》)

Linux kernel 4.9

Original article: https://zhuanlan.zhihu.com/p/361173109