21-Keypoint Hand Detection + Gesture Recognition [MediaPipe]


2022-02-28 03:02

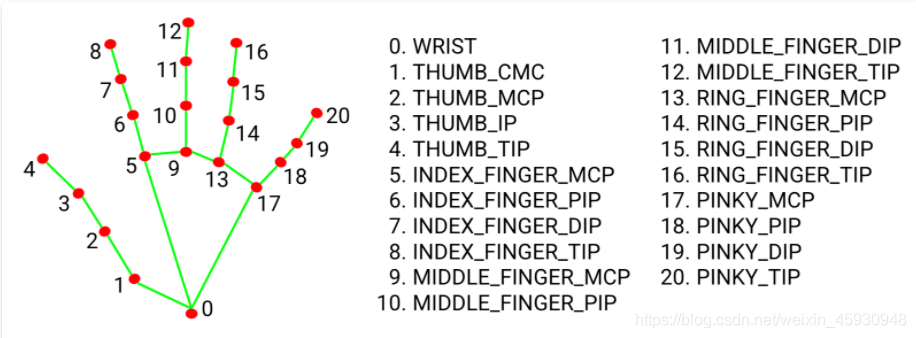

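MediaPipe is an open-source framework from Google Research for building multimedia machine learning applications. Its API can be called directly for object detection, face detection, keypoint detection, and similar tasks. This article covers its 21-keypoint hand detection (Windows 10, Python version).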

Installing mediapipe

pip install mediapipe

Creating the hand detection model

import cv2
import mediapipe as mp

mp_drawing = mp.solutions.drawing_utils
mp_hands = mp.solutions.hands

# Configuration for still images: detection runs on every input.
hands = mp_hands.Hands(
    static_image_mode=True,
    max_num_hands=2,
    min_detection_confidence=0.75,
    min_tracking_confidence=0.5)

# Configuration for video streams: detect once, then track.
# Create only the one that matches your input.
hands = mp_hands.Hands(
    static_image_mode=False,
    max_num_hands=2,
    min_detection_confidence=0.75,
    min_tracking_confidence=0.5)

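hands is the hand keypoint detector (an instance of mp_hands.Hands). It accepts four optional parameters:

1. static_image_mode: defaults to False. When False, the input is treated as a video stream: once hands have been detected, they are tracked across frames (detection + tracking), and detection is not run again until it loses track of any of the hands. When True, hand detection runs on every input image, which suits a batch of static, possibly unrelated images. (Set it to True when processing still images.)

2. max_num_hands: the maximum number of hands to detect. Defaults to 2.

3. min_detection_confidence: the minimum confidence for hand detection; a detection scoring above this value is considered successful. Defaults to 0.5.

4. min_tracking_confidence: the minimum confidence for the tracking model; a hand scoring above this value is considered successfully tracked. Defaults to 0.5. It is ignored when static_image_mode is True.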
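To make the difference between the two modes concrete, here is a toy model that counts how often the detector would run for a sequence of frames. It is only a sketch of the behavior described above, not MediaPipe's actual pipeline: the function `count_detector_runs` and the per-frame `tracking_scores` are hypothetical, and the model assumes the detector always finds a hand when it runs.

```python
def count_detector_runs(tracking_scores, static_image_mode=False,
                        min_tracking_confidence=0.5):
    """Toy model: count how often the hand detector would run.

    tracking_scores holds one hypothetical tracking confidence per frame.
    This is a simplification for illustration, not MediaPipe's real pipeline.
    """
    if static_image_mode:
        # Detection runs on every input image; tracking is never used.
        return len(tracking_scores)

    runs = 0
    tracking = False
    for score in tracking_scores:
        if not tracking:
            runs += 1          # no tracked hand: run the detector on this frame
            tracking = True    # assumption: the detector finds the hand
        elif score < min_tracking_confidence:
            tracking = False   # track lost: the next frame needs detection again
    return runs


scores = [0.9, 0.8, 0.3, 0.9, 0.9]
print(count_detector_runs(scores))                          # video mode: 2
print(count_detector_runs(scores, static_image_mode=True))  # image mode: 5
```

In video mode the detector runs on the first frame and once more after the score drops below the threshold; in image mode it runs on all five frames.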

Output

results = hands.process(frame)
print(results.multi_handedness)
print(results.multi_hand_landmarks)

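results.multi_handedness: the handedness of each detected hand, given as a label and a score. label is the string "Left" or "Right"; score is the confidence of that classification.

results.multi_hand_landmarks: the positions of the 21 hand keypoints, each with x, y, and z. x and y are coordinates normalized by the image width and height. z is the landmark depth, with the depth at the wrist as the origin: the smaller the value, the closer the landmark is to the camera, so a negative z means the point is nearer to the camera than the wrist.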
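The sketch below shows one way to read both fields and convert the normalized coordinates to pixels. The helper `summarize_hands` is hypothetical, and `fake_results` is a hand-built stand-in with made-up values so the example runs without a camera. It assumes each handedness entry exposes `classification[0].label` and `.score`, and that the two lists are in the same order.

```python
from types import SimpleNamespace


def summarize_hands(results, width, height):
    """Return one dict per detected hand: label, score, and pixel keypoints."""
    hands_found = []
    if not results.multi_hand_landmarks:
        # Both fields are empty when no hand was detected.
        return hands_found
    for handedness, hand_landmarks in zip(results.multi_handedness,
                                          results.multi_hand_landmarks):
        top = handedness.classification[0]
        # Scale normalized x, y back to pixel coordinates.
        points = [(int(lm.x * width), int(lm.y * height))
                  for lm in hand_landmarks.landmark]
        hands_found.append(
            {"label": top.label, "score": top.score, "points": points})
    return hands_found


# Stand-in for the object returned by hands.process(); values are made up.
fake_results = SimpleNamespace(
    multi_handedness=[SimpleNamespace(
        classification=[SimpleNamespace(label="Left", score=0.98)])],
    multi_hand_landmarks=[SimpleNamespace(
        landmark=[SimpleNamespace(x=0.5, y=0.25, z=0.0)] * 21)],
)
print(summarize_hands(fake_results, width=640, height=480)[0]["points"][0])
# (320, 120)
```

With a real result, pass `frame.shape[1]` as the width and `frame.shape[0]` as the height.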

Video detection code

import cv2
import mediapipe as mp

mp_drawing = mp.solutions.drawing_utils
mp_hands = mp.solutions.hands
hands = mp_hands.Hands(
    static_image_mode=False,
    max_num_hands=2,
    min_detection_confidence=0.75,
    min_tracking_confidence=0.75)

cap = cv2.VideoCapture(0)
while True:
    ret, frame = cap.read()
    if not ret:
        # Stop if the camera returns no frame.
        break
    # MediaPipe expects RGB input, while OpenCV captures BGR.
    frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    # The camera image is mirrored, so flip it horizontally.
    # Skip the flip if your camera is not mirrored.
    frame = cv2.flip(frame, 1)
    results = hands.process(frame)
    frame = cv2.cvtColor(frame, cv2.COLOR_RGB2BGR)

    if results.multi_handedness:
        for hand_label in results.multi_handedness:
            print(hand_label)
    if results.multi_hand_landmarks:
        for hand_landmarks in results.multi_hand_landmarks:
            print('hand_landmarks:', hand_landmarks)
            # Draw the keypoints and their connections.
            mp_drawing.draw_landmarks(
                frame, hand_landmarks, mp_hands.HAND_CONNECTIONS)

    cv2.imshow('MediaPipe Hands', frame)
    if cv2.waitKey(1) & 0xFF == 27:  # press Esc to quit
        break

cap.release()
cv2.destroyAllWindows()

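Keypoint detection results

Gesture recognition

Simple gesture recognition (with limitations) can be built by computing angles between the detected hand keypoints. For the thumb, for example, compute the angle between the vector from keypoint 0 to keypoint 2 and the vector from keypoint 3 to keypoint 4. If the angle is larger than an empirically chosen threshold, the finger is considered bent; if it is smaller than another empirical threshold, the finger is considered straight.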
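As a worked example of that angle test, the sketch below computes the angle between two 2D vectors from their dot product and applies a bent threshold. The helper names `angle_between` and `is_bent` and the thumb coordinates are made up for illustration; the 65-degree default mirrors the thr_angle value used in the complete code.

```python
import math


def angle_between(v1, v2):
    """Angle in degrees between two 2D vectors, or None if one has zero length."""
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return None
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against floating-point drift just outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def is_bent(angle, threshold=65.0):
    """Hypothetical helper: a finger counts as bent above an empirical threshold."""
    return angle is not None and angle > threshold


# Made-up pixel coordinates for thumb keypoints 0, 2, 3, and 4.
p0, p2, p3, p4 = (100, 300), (140, 240), (160, 210), (175, 185)
v_base = (p0[0] - p2[0], p0[1] - p2[1])  # vector between keypoints 0 and 2
v_tip = (p3[0] - p4[0], p3[1] - p4[1])   # vector between keypoints 3 and 4
angle = angle_between(v_base, v_tip)
print(round(angle, 1), is_bent(angle))
```

The two vectors here point in nearly the same direction, so the angle is small and the thumb is reported as not bent.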

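Complete code with gesture classification

import cv2
import mediapipe as mp
import math

def vector_2d_angle(v1, v2):
    '''
    Compute the angle (in degrees) between two 2D vectors.
    '''
    v1_x = v1[0]
    v1_y = v1[1]
    v2_x = v2[0]
    v2_y = v2[1]
    try:
        angle_ = math.degrees(math.acos((v1_x*v2_x + v1_y*v2_y) / (((v1_x**2 + v1_y**2)**0.5) * ((v2_x**2 + v2_y**2)**0.5))))
    except (ZeroDivisionError, ValueError):
        # Zero-length vector or cosine outside [-1, 1]: mark the angle as invalid.
        angle_ = 65535.
    if angle_ > 180.:
        angle_ = 65535.
    return angle_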

def hand_angle(hand_):
    '''
    Compute the 2D angle for each finger of one hand;
    the gesture is determined from these angles.
    '''
    angle_list = []
    #---------------------------- thumb
    angle_ = vector_2d_angle(
        ((int(hand_[0][0]) - int(hand_[2][0])), (int(hand_[0][1]) - int(hand_[2][1]))),
        ((int(hand_[3][0]) - int(hand_[4][0])), (int(hand_[3][1]) - int(hand_[4][1])))
        )
    angle_list.append(angle_)
    #---------------------------- index finger
    angle_ = vector_2d_angle(
        ((int(hand_[0][0]) - int(hand_[6][0])), (int(hand_[0][1]) - int(hand_[6][1]))),
        ((int(hand_[7][0]) - int(hand_[8][0])), (int(hand_[7][1]) - int(hand_[8][1])))
        )
    angle_list.append(angle_)
    #---------------------------- middle finger
    angle_ = vector_2d_angle(
        ((int(hand_[0][0]) - int(hand_[10][0])), (int(hand_[0][1]) - int(hand_[10][1]))),
        ((int(hand_[11][0]) - int(hand_[12][0])), (int(hand_[11][1]) - int(hand_[12][1])))
        )
    angle_list.append(angle_)
    #---------------------------- ring finger
    angle_ = vector_2d_angle(
        ((int(hand_[0][0]) - int(hand_[14][0])), (int(hand_[0][1]) - int(hand_[14][1]))),
        ((int(hand_[15][0]) - int(hand_[16][0])), (int(hand_[15][1]) - int(hand_[16][1])))
        )
    angle_list.append(angle_)
    #---------------------------- little finger (pinky)
    angle_ = vector_2d_angle(
        ((int(hand_[0][0]) - int(hand_[18][0])), (int(hand_[0][1]) - int(hand_[18][1]))),
        ((int(hand_[19][0]) - int(hand_[20][0])), (int(hand_[19][1]) - int(hand_[20][1])))
        )
    angle_list.append(angle_)
    return angle_list

def h_gesture(angle_list):
    '''
    Classify the gesture using 2D angle constraints.
    Gestures: fist, five, gun, love, one, six, three, thumbUp, two.
    Returns None if no rule matches.
    '''
    thr_angle = 65.        # above this, a finger counts as bent
    thr_angle_thumb = 53.  # bent threshold for the thumb
    thr_angle_s = 49.      # below this, a finger counts as straight
    gesture_str = None
    if 65535. not in angle_list:
        if (angle_list[0] > thr_angle_thumb) and (angle_list[1] > thr_angle) and (angle_list[2] > thr_angle) and (angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
            gesture_str = "fist"
        elif (angle_list[0] < thr_angle_s) and (angle_list[1] < thr_angle_s) and (angle_list[2] < thr_angle_s) and (angle_list[3] < thr_angle_s) and (angle_list[4] < thr_angle_s):
            gesture_str = "five"
        elif (angle_list[0] < thr_angle_s) and (angle_list[1] < thr_angle_s) and (angle_list[2] > thr_angle) and (angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
            gesture_str = "gun"
        elif (angle_list[0] < thr_angle_s) and (angle_list[1] < thr_angle_s) and (angle_list[2] > thr_angle) and (angle_list[3] > thr_angle) and (angle_list[4] < thr_angle_s):
            gesture_str = "love"
        elif (angle_list[0] > 5) and (angle_list[1] < thr_angle_s) and (angle_list[2] > thr_angle) and (angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
            gesture_str = "one"
        elif (angle_list[0] < thr_angle_s) and (angle_list[1] > thr_angle) and (angle_list[2] > thr_angle) and (angle_list[3] > thr_angle) and (angle_list[4] < thr_angle_s):
            gesture_str = "six"
        elif (angle_list[0] > thr_angle_thumb) and (angle_list[1] < thr_angle_s) and (angle_list[2] < thr_angle_s) and (angle_list[3] < thr_angle_s) and (angle_list[4] > thr_angle):
            gesture_str = "three"
        elif (angle_list[0] < thr_angle_s) and (angle_list[1] > thr_angle) and (angle_list[2] > thr_angle) and (angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
            gesture_str = "thumbUp"
        elif (angle_list[0] > thr_angle_thumb) and (angle_list[1] < thr_angle_s) and (angle_list[2] < thr_angle_s) and (angle_list[3] > thr_angle) and (angle_list[4] > thr_angle):
            gesture_str = "two"
    return gesture_str

def detect():
    mp_drawing = mp.solutions.drawing_utils
    mp_hands = mp.solutions.hands
    hands = mp_hands.Hands(
        static_image_mode=False,
        max_num_hands=1,
        min_detection_confidence=0.75,
        min_tracking_confidence=0.75)
    cap = cv2.VideoCapture(0)
    while True:
        ret, frame = cap.read()
        if not ret:
            # Stop if the camera returns no frame.
            break
        frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        frame = cv2.flip(frame, 1)
        results = hands.process(frame)
        frame = cv2.cvtColor(frame, cv2.COLOR_RGB2BGR)

        if results.multi_hand_landmarks:
            for hand_landmarks in results.multi_hand_landmarks:
                mp_drawing.draw_landmarks(frame, hand_landmarks, mp_hands.HAND_CONNECTIONS)
                # Convert the 21 normalized keypoints to pixel coordinates.
                hand_local = []
                for i in range(21):
                    x = hand_landmarks.landmark[i].x * frame.shape[1]
                    y = hand_landmarks.landmark[i].y * frame.shape[0]
                    hand_local.append((x, y))
                if hand_local:
                    angle_list = hand_angle(hand_local)
                    gesture_str = h_gesture(angle_list)
                    # h_gesture returns None when no rule matches; draw only a real label.
                    if gesture_str:
                        cv2.putText(frame, gesture_str, (0, 100), 0, 1.3, (0, 0, 255), 3)
        cv2.imshow('MediaPipe Hands', frame)
        if cv2.waitKey(1) & 0xFF == 27:  # press Esc to quit
            break
    cap.release()
    cv2.destroyAllWindows()

if __name__ == '__main__':
    detect()
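One detail of h_gesture worth spelling out is that it uses two thresholds with a gap between them: thr_angle_s = 49 for a straight finger and thr_angle = 65 for a bent one. An angle that falls in between satisfies neither test, which is one reason the function can return None. The sketch below illustrates this for the four non-thumb fingers; `finger_state` is a hypothetical helper, and the sample angles are made up. In the code above the thumb uses its own bent threshold, thr_angle_thumb = 53.

```python
def finger_state(angle, straight_below=49.0, bent_above=65.0):
    """Map one finger angle (degrees) to 'straight', 'bent', or 'uncertain'."""
    if angle < straight_below:
        return "straight"
    if angle > bent_above:
        return "bent"
    # Between the two thresholds, neither rule applies.
    return "uncertain"


# Made-up angles for the index, middle, ring, and little finger.
sample_angles = [12.0, 57.0, 140.0, 150.0]
print([finger_state(a) for a in sample_angles])
# ['straight', 'uncertain', 'bent', 'bent']
```

The gap acts as a dead zone that keeps a finger near the boundary from flickering between the two states. The cost is that some frames get no gesture label.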