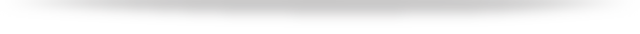

一篇文章教你搞懂日志采集利器 Filebeat

本文使用的Filebeat是7.7.0的版本,文章将从如下几个方面说明:

Filebeat是什么,可以用来干嘛 Filebeat的原理是怎样的,怎么构成的 Filebeat应该怎么玩

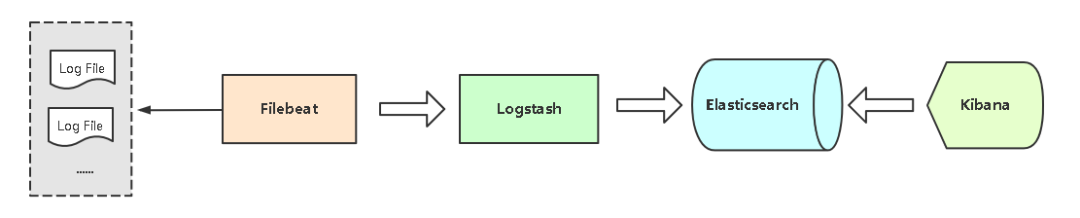

Packetbeat:网络数据(收集网络流量数据) Metricbeat:指标(收集系统、进程和文件系统级别的CPU和内存使用情况等数据) Filebeat:日志文件(收集文件数据) Winlogbeat:Windows事件日志(收集Windows事件日志数据) Auditbeat:审计数据(收集审计日志) Heartbeat:运行时间监控(收集系统运行时的数据)

文件处理程序关闭,如果harvester仍在读取文件时被删除,则释放底层资源。 只有在scan_frequency结束之后,才会再次启动文件的收集。 如果该文件在harvester关闭时被移动或删除,该文件的收集将不会继续。

curl-L-Ohttps://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.7.0-linux-x86_64.tar.gz

tar -xzvf filebeat-7.7.0-linux-x86_64.tar.gz

export #导出

run #执行(默认执行)

test #测试配置

keystore #秘钥存储

modules #模块配置管理

setup #设置初始环境

创建一个存储密码的keystore:filebeat keystore create 然后往其中添加键值对,例如:filebeatk eystore add ES_PWD 使用覆盖原来键的值:filebeat key store add ES_PWD–force 删除键值对:filebeat key store remove ES_PWD 查看已有的键值对:filebeat key store list

output.elasticsearch.password:"${ES_PWD}"

type: log #input类型为log

enable: true #表示是该log类型配置生效

paths: #指定要监控的日志,目前按照Go语言的glob函数处理。没有对配置目录做递归处理,比如配置的如果是:

- /var/log/* /*.log #则只会去/var/log目录的所有子目录中寻找以".log"结尾的文件,而不会寻找/var/log目录下以".log"结尾的文件。

recursive_glob.enabled: #启用全局递归模式,例如/foo/**包括/foo, /foo/*, /foo/*/*

encoding:#指定被监控的文件的编码类型,使用plain和utf-8都是可以处理中文日志的

exclude_lines: ['^DBG'] #不包含匹配正则的行

include_lines: ['^ERR', '^WARN'] #包含匹配正则的行

harvester_buffer_size: 16384 #每个harvester在获取文件时使用的缓冲区的字节大小

max_bytes: 10485760 #单个日志消息可以拥有的最大字节数。max_bytes之后的所有字节都被丢弃而不发送。默认值为10MB (10485760)

exclude_files: ['\.gz$'] #用于匹配希望Filebeat忽略的文件的正则表达式列表

ingore_older: 0 #默认为0,表示禁用,可以配置2h,2m等,注意ignore_older必须大于close_inactive的值.表示忽略超过设置值未更新的

文件或者文件从来没有被harvester收集

close_* #close_ *配置选项用于在特定标准或时间之后关闭harvester。 关闭harvester意味着关闭文件处理程序。 如果在harvester关闭

后文件被更新,则在scan_frequency过后,文件将被重新拾取。 但是,如果在harvester关闭时移动或删除文件,Filebeat将无法再次接收文件

,并且harvester未读取的任何数据都将丢失。

close_inactive #启动选项时,如果在制定时间没有被读取,将关闭文件句柄

读取的最后一条日志定义为下一次读取的起始点,而不是基于文件的修改时间

如果关闭的文件发生变化,一个新的harverster将在scan_frequency运行后被启动

建议至少设置一个大于读取日志频率的值,配置多个prospector来实现针对不同更新速度的日志文件

使用内部时间戳机制,来反映记录日志的读取,每次读取到最后一行日志时开始倒计时使用2h 5m 来表示

close_rename #当选项启动,如果文件被重命名和移动,filebeat关闭文件的处理读取

close_removed #当选项启动,文件被删除时,filebeat关闭文件的处理读取这个选项启动后,必须启动clean_removed

close_eof #适合只写一次日志的文件,然后filebeat关闭文件的处理读取

close_timeout #当选项启动时,filebeat会给每个harvester设置预定义时间,不管这个文件是否被读取,达到设定时间后,将被关闭

close_timeout 不能等于ignore_older,会导致文件更新时,不会被读取如果output一直没有输出日志事件,这个timeout是不会被启动的,

至少要要有一个事件发送,然后haverter将被关闭

设置0 表示不启动

clean_inactived #从注册表文件中删除先前收获的文件的状态

设置必须大于ignore_older+scan_frequency,以确保在文件仍在收集时没有删除任何状态

配置选项有助于减小注册表文件的大小,特别是如果每天都生成大量的新文件

此配置选项也可用于防止在Linux上重用inode的Filebeat问题

clean_removed #启动选项后,如果文件在磁盘上找不到,将从注册表中清除filebeat

如果关闭close removed 必须关闭clean removed

scan_frequency #prospector检查指定用于收获的路径中的新文件的频率,默认10s

tail_files:#如果设置为true,Filebeat从文件尾开始监控文件新增内容,把新增的每一行文件作为一个事件依次发送,

而不是从文件开始处重新发送所有内容。

symlinks:#符号链接选项允许Filebeat除常规文件外,可以收集符号链接。收集符号链接时,即使报告了符号链接的路径,

Filebeat也会打开并读取原始文件。

backoff: #backoff选项指定Filebeat如何积极地抓取新文件进行更新。默认1s,backoff选项定义Filebeat在达到EOF之后

再次检查文件之间等待的时间。

max_backoff: #在达到EOF之后再次检查文件之前Filebeat等待的最长时间

backoff_factor: #指定backoff尝试等待时间几次,默认是2

harvester_limit:#harvester_limit选项限制一个prospector并行启动的harvester数量,直接影响文件打开数

tags #列表中添加标签,用过过滤,例如:tags: ["json"]

fields #可选字段,选择额外的字段进行输出可以是标量值,元组,字典等嵌套类型

默认在sub-dictionary位置

filebeat.inputs:

fields:

app_id: query_engine_12

fields_under_root #如果值为ture,那么fields存储在输出文档的顶级位置

multiline.pattern #必须匹配的regexp模式

multiline.negate #定义上面的模式匹配条件的动作是 否定的,默认是false

假如模式匹配条件'^b',默认是false模式,表示讲按照模式匹配进行匹配 将不是以b开头的日志行进行合并

如果是true,表示将不以b开头的日志行进行合并

multiline.match # 指定Filebeat如何将匹配行组合成事件,在之前或者之后,取决于上面所指定的negate

multiline.max_lines #可以组合成一个事件的最大行数,超过将丢弃,默认500

multiline.timeout #定义超时时间,如果开始一个新的事件在超时时间内没有发现匹配,也将发送日志,默认是5s

max_procs #设置可以同时执行的最大CPU数。默认值为系统中可用的逻辑CPU的数量。

name #为该filebeat指定名字,默认为主机的hostname

#=========================== Filebeat inputs =============================

filebeat.inputs:

# Each - is an input. Most options can be set at the input level, so

# you can use different inputs for various configurations.

# Below are the input specific configurations.

- type: log

# Change to true to enable this input configuration.

enabled: true

# Paths that should be crawled and fetched. Glob based paths.

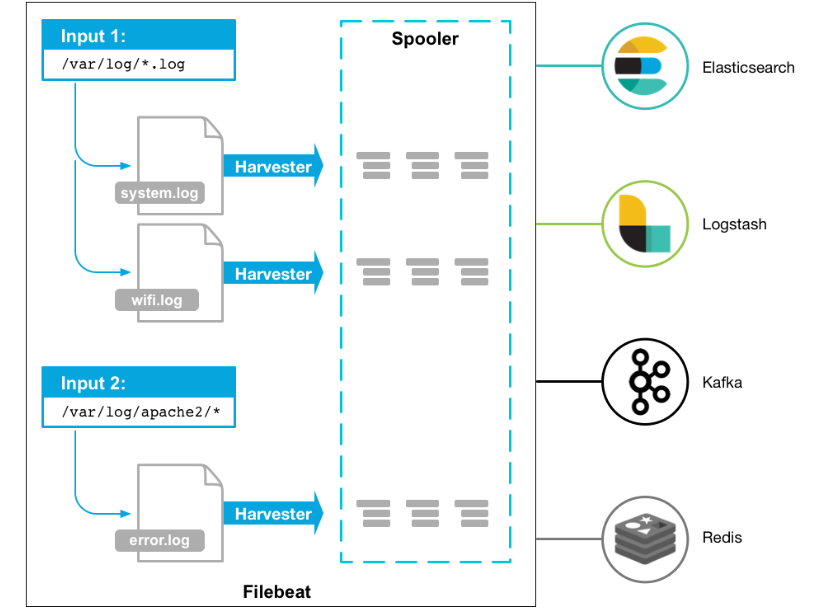

paths: #配置多个日志路径

-/var/logs/es_aaa_index_search_slowlog.log

-/var/logs/es_bbb_index_search_slowlog.log

-/var/logs/es_ccc_index_search_slowlog.log

-/var/logs/es_ddd_index_search_slowlog.log

#- c:\programdata\elasticsearch\logs\*

# Exclude lines. A list of regular expressions to match. It drops the lines that are

# matching any regular expression from the list.

#exclude_lines: ['^DBG']

# Include lines. A list of regular expressions to match. It exports the lines that are

# matching any regular expression from the list.

#include_lines: ['^ERR', '^WARN']

# Exclude files. A list of regular expressions to match. Filebeat drops the files that

# are matching any regular expression from the list. By default, no files are dropped.

#exclude_files: ['.gz$']

# Optional additional fields. These fields can be freely picked

# to add additional information to the crawled log files for filtering

#fields:

# level: debug

# review: 1

### Multiline options

# Multiline can be used for log messages spanning multiple lines. This is common

# for Java Stack Traces or C-Line Continuation

# The regexp Pattern that has to be matched. The example pattern matches all lines starting with [

#multiline.pattern: ^\[

# Defines if the pattern set under pattern should be negated or not. Default is false.

#multiline.negate: false

# Match can be set to "after" or "before". It is used to define if lines should be append to a pattern

# that was (not) matched before or after or as long as a pattern is not matched based on negate.

# Note: After is the equivalent to previous and before is the equivalent to to next in Logstash

#multiline.match: after

#================================ Outputs =====================================

#----------------------------- Logstash output --------------------------------

output.logstash:

# The Logstash hosts #配多个logstash使用负载均衡机制

hosts: ["192.168.110.130:5044","192.168.110.131:5044","192.168.110.132:5044","192.168.110.133:5044"]

loadbalance: true #使用了负载均衡

# Optional SSL. By default is off.

# List of root certificates for HTTPS server verifications

#ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

# Certificate for SSL client authentication

#ssl.certificate: "/etc/pki/client/cert.pem"

# Client Certificate Key

#ssl.key: "/etc/pki/client/cert.key"

input {

beats {

port => 5044

}

}

output {

elasticsearch {

hosts => ["http://192.168.110.130:9200"] #这里可以配置多个

index => "query-%{yyyyMMdd}"

}

}

###################### Filebeat Configuration Example #########################

# This file is an example configuration file highlighting only the most common

# options. The filebeat.reference.yml file from the same directory contains all the

# supported options with more comments. You can use it as a reference.

#

# You can find the full configuration reference here:

# https://www.elastic.co/guide/en/beats/filebeat/index.html

# For more available modules and options, please see the filebeat.reference.yml sample

# configuration file.

#=========================== Filebeat inputs =============================

filebeat.inputs:

# Each - is an input. Most options can be set at the input level, so

# you can use different inputs for various configurations.

# Below are the input specific configurations.

- type: log

# Change to true to enable this input configuration.

enabled: true

# Paths that should be crawled and fetched. Glob based paths.

paths:

-/var/logs/es_aaa_index_search_slowlog.log

-/var/logs/es_bbb_index_search_slowlog.log

-/var/logs/es_ccc_index_search_slowlog.log

-/var/logs/es_dddd_index_search_slowlog.log

#- c:\programdata\elasticsearch\logs\*

# Exclude lines. A list of regular expressions to match. It drops the lines that are

# matching any regular expression from the list.

#exclude_lines: ['^DBG']

# Include lines. A list of regular expressions to match. It exports the lines that are

# matching any regular expression from the list.

#include_lines: ['^ERR', '^WARN']

# Exclude files. A list of regular expressions to match. Filebeat drops the files that

# are matching any regular expression from the list. By default, no files are dropped.

#exclude_files: ['.gz$']

# Optional additional fields. These fields can be freely picked

# to add additional information to the crawled log files for filtering

#fields:

# level: debug

# review: 1

### Multiline options

# Multiline can be used for log messages spanning multiple lines. This is common

# for Java Stack Traces or C-Line Continuation

# The regexp Pattern that has to be matched. The example pattern matches all lines starting with [

#multiline.pattern: ^\[

# Defines if the pattern set under pattern should be negated or not. Default is false.

#multiline.negate: false

# Match can be set to "after" or "before". It is used to define if lines should be append to a pattern

# that was (not) matched before or after or as long as a pattern is not matched based on negate.

# Note: After is the equivalent to previous and before is the equivalent to to next in Logstash

#multiline.match: after

#============================= Filebeat modules ===============================

filebeat.config.modules:

# Glob pattern for configuration loading

path: ${path.config}/modules.d/*.yml

# Set to true to enable config reloading

reload.enabled: false

# Period on which files under path should be checked for changes

#reload.period: 10s

#==================== Elasticsearch template setting ==========================

#================================ General =====================================

# The name of the shipper that publishes the network data. It can be used to group

# all the transactions sent by a single shipper in the web interface.

name: filebeat222

# The tags of the shipper are included in their own field with each

# transaction published.

#tags: ["service-X", "web-tier"]

# Optional fields that you can specify to add additional information to the

# output.

#fields:

# env: staging

#cloud.auth:

#================================ Outputs =====================================

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:

# Array of hosts to connect to.

hosts: ["192.168.110.130:9200","92.168.110.131:9200"]

# Protocol - either `http` (default) or `https`.

#protocol: "https"

# Authentication credentials - either API key or username/password.

#api_key: "id:api_key"

username: "elastic"

password: "${ES_PWD}" #通过keystore设置密码

#============================== Kibana =====================================

# Starting with Beats version 6.0.0, the dashboards are loaded via the Kibana API.

# This requires a Kibana endpoint configuration.

setup.kibana:

# Kibana Host

# Scheme and port can be left out and will be set to the default (http and 5601)

# In case you specify and additional path, the scheme is required: http://localhost:5601/path

# IPv6 addresses should always be defined as: https://[2001:db8::1]:5601

host: "192.168.110.130:5601" #指定kibana

username: "elastic" #用户

password: "${ES_PWD}" #密码,这里使用了keystore,防止明文密码

# Kibana Space ID

# ID of the Kibana Space into which the dashboards should be loaded. By default,

# the Default Space will be used.

#space.id:

#================================ Outputs =====================================

# Configure what output to use when sending the data collected by the beat.

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:

# Array of hosts to connect to.

hosts: ["192.168.110.130:9200","192.168.110.131:9200"]

# Protocol - either `http` (default) or `https`.

#protocol: "https"

# Authentication credentials - either API key or username/password.

#api_key: "id:api_key"

username: "elastic" #es的用户

password: "${ES_PWD}" # es的密码

#这里不能指定index,因为我没有配置模板,会自动生成一个名为filebeat-%{[beat.version]}-%{+yyyy.MM.dd}的索引

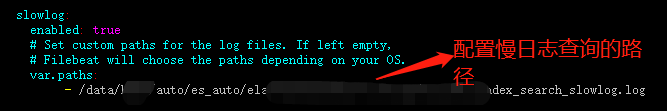

cd filebeat-7.7.0-linux-x86_64/modules.d

./filebeat modules elasticsearch

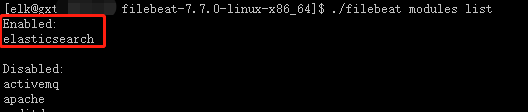

./filebeat modules list

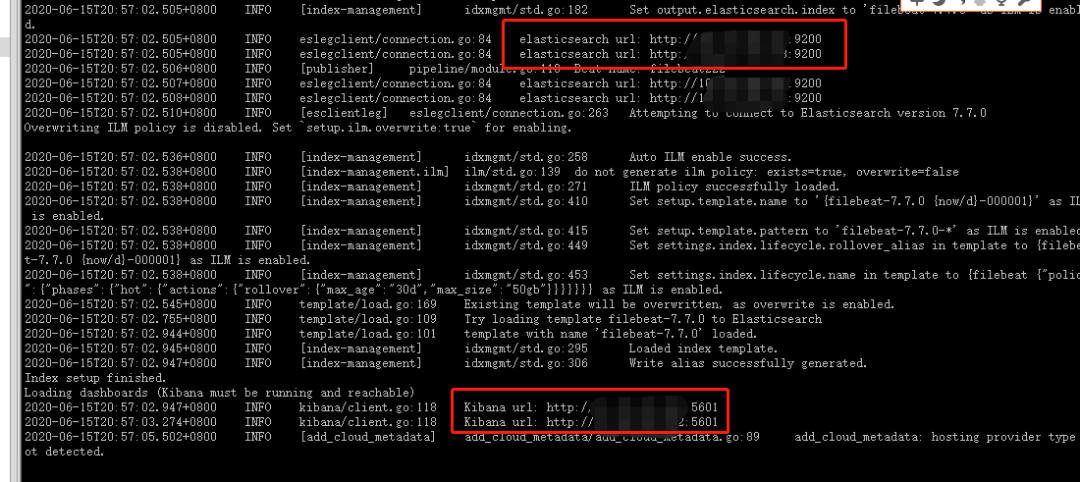

./filebeat setup -e

./filebeat -e

来源:cnblogs.com/zsql/p/13137833.html

版权申明:内容来源网络,版权归原创者所有。除非无法确认,我们都会标明作者及出处,如有侵权烦请告知,我们会立即删除并表示歉意。谢谢!

评论