【NLP】基于TF-IDF和KNN的模糊字符串匹配优化

编译 | VK

来源 | Towards Data Science

研究表明,94%的企业承认有重复的数据,而且这些重复的数据大部分是不完全匹配的,因此通常不会被发现

什么是模糊字符串匹配?

随着数据的增长,对速度的需求也在增长。

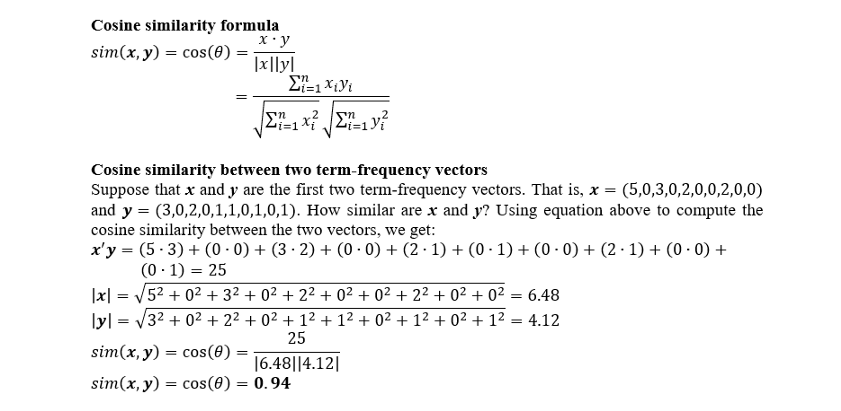

TF-IDF和KNN如何加速计算时间?

1-gram

['independence']

2-gram

['in','nd','de','ep','pe','en','nd','de','en','nc','ce']

3-gram

['ind','nde','dep','epe','pen','end','nde','den','enc','nce']

让我们来练习一下

# 导入数据操作模块

import pandas as pd

# 导入线性代数模块

import numpy as np

# 导入模糊字符串匹配模块

from fuzzywuzzy import fuzz, process

# 导入正则表达式模块

import re

# 导入迭代模块

import itertools

# 导入函数开发模块

from typing import Union, List, Tuple

# 导入TF-IDF模块

from sklearn.feature_extraction.text import TfidfVectorizer

# 导入余弦相似度模块

from sklearn.metrics.pairwise import cosine_similarity

# 导入KNN模块

from sklearn.neighbors import NearestNeighbors

# 字符串预处理

def preprocess_string(s):

# 去掉字符串之间的空格

s = re.sub(r'(?<=\b\w)\s*[ &]\s*(?=\w\b)', '', s)

return s

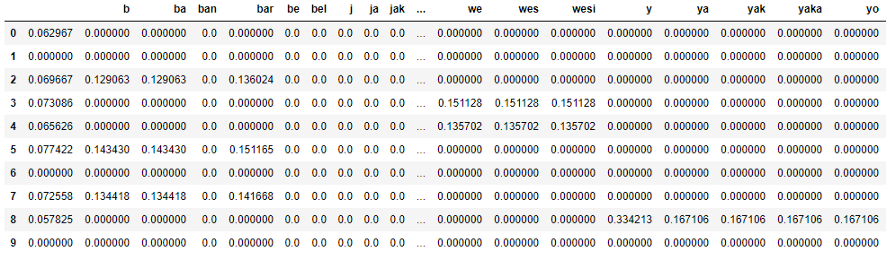

# 字符串匹配- TF-IDF

def build_vectorizer(

clean: pd.Series,

analyzer: str = 'char',

ngram_range: Tuple[int, int] = (1, 4),

n_neighbors: int = 1,

**kwargs

) -> Tuple:

# 创建vectorizer

vectorizer = TfidfVectorizer(analyzer = analyzer, ngram_range = ngram_range, **kwargs)

X = vectorizer.fit_transform(clean.values.astype('U'))

# 最近邻

nbrs = NearestNeighbors(n_neighbors = n_neighbors, metric = 'cosine').fit(X)

return vectorizer, nbrs

# 字符串匹配- KNN

def tfidf_nn(

messy,

clean,

n_neighbors = 1,

**kwargs

):

# 拟合干净的数据和转换凌乱的数据

vectorizer, nbrs = build_vectorizer(clean, n_neighbors = n_neighbors, **kwargs)

input_vec = vectorizer.transform(messy)

# 确定可能的最佳匹配

distances, indices = nbrs.kneighbors(input_vec, n_neighbors = n_neighbors)

nearest_values = np.array(clean)[indices]

return nearest_values, distances

# 字符串匹配-匹配模糊

def find_matches_fuzzy(

row,

match_candidates,

limit = 5

):

row_matches = process.extract(

row, dict(enumerate(match_candidates)),

scorer = fuzz.token_sort_ratio,

limit = limit

)

result = [(row, match[0], match[1]) for match in row_matches]

return result

# 字符串匹配- TF-IDF

def fuzzy_nn_match(

messy,

clean,

column,

col,

n_neighbors = 100,

limit = 5, **kwargs):

nearest_values, _ = tfidf_nn(messy, clean, n_neighbors, **kwargs)

results = [find_matches_fuzzy(row, nearest_values[i], limit) for i, row in enumerate(messy)]

df = pd.DataFrame(itertools.chain.from_iterable(results),

columns = [column, col, 'Ratio']

)

return df

# 字符串匹配-模糊

def fuzzy_tf_idf(

df: pd.DataFrame,

column: str,

clean: pd.Series,

mapping_df: pd.DataFrame,

col: str,

analyzer: str = 'char',

ngram_range: Tuple[int, int] = (1, 3)

) -> pd.Series:

# 创建vectorizer

clean = clean.drop_duplicates().reset_index(drop = True)

messy_prep = df[column].drop_duplicates().dropna().reset_index(drop = True).astype(str)

messy = messy_prep.apply(preprocess_string)

result = fuzzy_nn_match(messy = messy, clean = clean, column = column, col = col, n_neighbors = 1)

# 混乱到干净

return result

# 导入计时模块

import time

# 加载数据

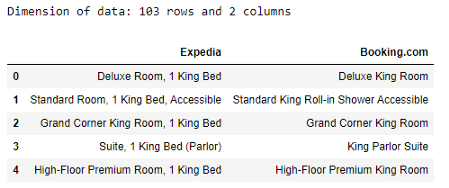

df = pd.read_csv('room_type.csv')

# 检查数据的维度

print('Dimension of data: {} rows and {} columns'.format(len(df), len(df.columns)))

# 打印前5行

df.head()

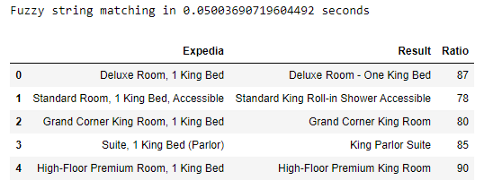

# 运行模糊字符串匹配算法

start = time.time()

df_result = (df.pipe(fuzzy_tf_idf, # 函数和混乱的数据

column = 'Expedia', # 混乱数据列

clean = df['Booking.com'], # 主数据(列表)

mapping_df = df, # 主数据

col = 'Result') # 可以自定义

)

end = time.time()

# 打印计算时间

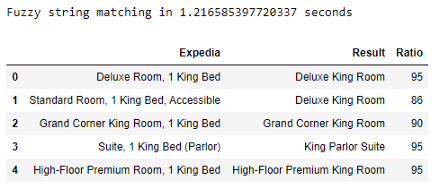

print('Fuzzy string matching in {} seconds'.format(end - start))

# 查看模糊字符串匹配的结果

df_result.head()

与Levenshtein 距离的比较

# 导入数据操作模块

import pandas as pd

# 导入线性代数模块

import numpy as np

# 导入模糊字符串匹配模块

from fuzzywuzzy import fuzz, process

# 导入二分搜索模块

def stringMatching(

df: pd.DataFrame,

column: str,

clean: pd.Series,

mapping_df: pd.DataFrame,

col: str

):

# 创建vectorizer

categoryClean = clean.drop_duplicates().reset_index(drop = True)

categoryMessy = df[column].drop_duplicates().dropna().reset_index(drop = True).astype(str)

categoryFuzzy = {}

ratioFuzzy = {}

for i in range(len(categoryMessy)):

resultFuzzy = process.extractOne(categoryMessy[i].lower(), categoryClean)

# 映射

catFuzzy = {categoryMessy[i]:resultFuzzy[0]}

ratFuzzy = {categoryMessy[i]:resultFuzzy[1]}

# 保存结果

categoryFuzzy.update(catFuzzy)

#保存比率

ratioFuzzy.update(ratFuzzy)

# 创建列名

catCol = col

ratCol = 'Ratio'

# 合并结果

df[catCol] = df[column]

df[ratCol] = df[column]

# 映射结果

df[catCol] = df[catCol].map(categoryFuzzy)

df[ratCol] = df[ratCol].map(ratioFuzzy)

return df

# 运行模糊字符串匹配算法

start = time.time()

df_result = (df.pipe(stringMatching, # 函数和混乱的数据

column = 'Expedia', # 混乱数据列

clean = df['Booking.com'], # 主数据

mapping_df = df, # 主数据

col = 'Result') # 可以自定义

)

end = time.time()

# 打印计算时间

print('Fuzzy string matching in {} seconds'.format(end - start))

# 查看模糊字符串匹配的结果

df_result.head()

结论

参考文献

往期精彩回顾

本站qq群851320808,加入微信群请扫码:

评论